Research & Projects

Selection of research interests and scientific projects

Quantum Computing

Quantum computing is set to transform how we approach complex calculations and simulations across physics, including high-energy and particle physics. By harnessing the unique properties of quantum mechanics, quantum computing holds the promise of addressing challenges that are computationally prohibitive on classical machines. My research focuses on two core areas where quantum computing can have a significant impact: Quantum Machine Learning, specifically Generative Models, and Quantum Hamiltonian Learning.

Quantum Generative Models

Generative models play a crucial role in particle physics by capturing the probabilistic distributions of particle interactions and substructures. Quantum Generative Models, specifically Quantum GANs and Quantum Boltzmann Machines (QBMs), build on this approach by utilising quantum states to represent and generate data distributions that are computationally challenging for classical models. My research in this area includes:

- Quantum Boltzmann Machines (QBMs): I am investigating the application of Quantum Boltzmann Machines to the analysis of jet substructure. This approach leverages the ability of QBMs to capture intricate correlations within high-dimensional data. Our published work [1] demonstrates the potential of QBMs to model complex particle interactions.

Summary of the main result: Fully-visible BMs can generate distributions that match their connectivity. A nearest-neighbour connected BM can represent a distribution generated by nearest-neighbour statistics. We show that a fully-visible QBM, on the other hand, can represent distributions generated by higher-dimensional models. Alternatively, a classical RBM, consisting of visible (blue) and hidden (hollow, white) units, can also learn both of these distributions, but it requires additional hidden units to do so.

- Quantum GANs for Jet Substructure: By applying Quantum GANs to jet substructure modelling, we aim to reveal insights into the dynamic processes underlying particle jets. Quantum GANs provide a novel tool to approximate and generate realistic particle distributions, pushing the boundaries of data-driven simulations in high-energy physics. I presented preliminary results on this work at the ML4Jets workshop in Paris, highlighting its potential for advancing our understanding of particle physics processes.

Preliminary Quantum GAN results on jet substructure modelling, presented at ML4Jets in Paris.
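To make the idea of a fully-visible BM with nearest-neighbour connectivity (from the QBM summary above) more concrete, here is a minimal classical sketch. It enumerates the exact Boltzmann distribution of a short open chain. The chain length, coupling values, and function names are illustrative assumptions and are not taken from [1].

```python
import itertools
import math

def chain_energy(spins, couplings, biases):
    """Ising-type energy of an open chain with nearest-neighbour couplings only."""
    pair = sum(w * spins[i] * spins[i + 1] for i, w in enumerate(couplings))
    local = sum(b * s for b, s in zip(biases, spins))
    return -(pair + local)

def boltzmann_distribution(couplings, biases):
    """Exact p(s) = exp(-E(s)) / Z, obtained by enumerating all spin configurations."""
    n = len(biases)
    states = list(itertools.product((-1, 1), repeat=n))
    weights = [math.exp(-chain_energy(s, couplings, biases)) for s in states]
    z = sum(weights)
    return {s: w / z for s, w in zip(states, weights)}

def correlation(dist, i, j):
    """Two-point correlation <s_i s_j> under the distribution."""
    return sum(p * s[i] * s[j] for s, p in dist.items())

# Illustrative 4-unit chain with equal couplings and no biases.
dist = boltzmann_distribution([0.8, 0.8, 0.8], [0.0, 0.0, 0.0, 0.0])
print(correlation(dist, 0, 1), correlation(dist, 0, 2))
```

In this toy chain all correlations follow from the nearest-neighbour couplings: with zero biases, the correlation between units two sites apart is the product of the two neighbouring correlations.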

Quantum Hamiltonian Learning

Quantum Hamiltonian Learning offers a powerful framework for estimating parameters within quantum systems, with applications that extend to the Standard Model Effective Field Theory (SMEFT). In particle physics, Hamiltonian learning can help refine our understanding of fundamental parameters and test Standard Model predictions with unparalleled precision.

Through these research projects, I am committed to advancing the integration of quantum computing in particle physics, pushing the boundaries of what’s possible in theoretical and experimental analysis. Each project is a step towards bridging quantum computational models with real-world particle physics applications, enhancing our ability to explore and understand the universe at its most fundamental level.

Quantum Sensing and Chromatic Calorimetry

The chromatic calorimeter concept introduces an innovative approach in high-energy physics calorimetry by integrating Quantum Dot (QD) technology within traditional homogeneous calorimeters [1]. This method leverages the unique emission properties of quantum dots to potentially achieve enhanced energy resolution and depth information, making it a promising candidate for future collider experiments where precision is paramount. This concept is also highlighted in the ECFA Detector R&D Roadmap, specifically within the DRD5 report on quantum and emerging technologies [2].

Concept of Quantum dot powered chromatic calorimeters

Chromatic calorimetry utilises the narrow, tunable emission spectra of quantum dots embedded within a transparent, high-density material to create a colour-coded representation of particle showers. This design aims to provide continuous longitudinal segmentation of the shower profile, which is critical for precise energy measurement and particle identification. In this configuration:

- Quantum Dot Emission Tuning: Quantum dots are selected for their ability to emit light at specific wavelengths, which can be controlled by adjusting their size. Larger quantum dots at the front are intended to emit longer wavelengths, while smaller quantum dots deeper within the calorimeter are designed to emit shorter wavelengths.

- Energy and Depth Measurement: With this layered setup, each zone is expected to absorb specific wavelengths and pass others, capturing both energy deposition and depth information. This polychromatic structure functions as an embedded wavelength shifter, mapping radiation intensity to fluorescence light across a spectrum of wavelengths.
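The colour-to-depth mapping described above can be illustrated with a toy reconstruction. The sketch below assumes a hypothetical four-layer layout (the wavelengths and depths are placeholders, not values from [1]) and summarises a shower by its total light and its light-weighted mean depth.

```python
# Hypothetical layer layout: (emission wavelength in nm, layer centre depth in cm).
# Longer wavelengths sit at the front and shorter ones deeper, as described above.
LAYERS = [(630.0, 2.0), (580.0, 6.0), (520.0, 10.0), (450.0, 14.0)]

def shower_summary(light_by_wavelength):
    """Return (total light, light-weighted mean depth) from per-wavelength signals."""
    total = 0.0
    weighted_depth = 0.0
    for wavelength, depth in LAYERS:
        signal = light_by_wavelength.get(wavelength, 0.0)
        total += signal
        weighted_depth += signal * depth
    if total == 0.0:
        raise ValueError("no light recorded in any wavelength band")
    return total, weighted_depth / total

# Example: a shower depositing most of its light in the third layer.
total, mean_depth = shower_summary({630.0: 10.0, 580.0: 30.0, 520.0: 40.0, 450.0: 20.0})
print(total, mean_depth)
```

A real reconstruction would also have to correct for re-absorption and for the spectral response of the photodetector, which this sketch ignores.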

Conceptual illustration of chromatic calorimetry using quantum dots for precise energy and depth measurement in high-energy physics.

Initial tests at CERN

With collaborators at CERN, we have performed initial beam tests [3] in which we combined various scintillating materials (GAGG, PWO, BGO, and LYSO) with different emission properties, with the goal of validating the chromatic readout and reconstruction. The test beam results demonstrated clear analytical discrimination between electron and pion events, with the reconstructed energy signals revealing valuable separability between hadronic and electromagnetic interactions at higher energies. The signals also exhibited a distinct evolution with the incoming particle's energy.

Applications in Particle Physics and Future Directions

Chromatic calorimeters hold promise for high-energy physics experiments, offering capabilities for precision measurements in particle tracking and energy reconstruction. At facilities like CERN and in projects such as the Future Circular Collider (FCC), these advancements could enable better isolation of rare decay events and a deeper understanding of Standard Model and Beyond Standard Model phenomena.

Ongoing research focuses on refining this technology, particularly addressing challenges such as photon re-absorption and the radiation hardness of the quantum dots. These efforts aim to ensure that chromatic calorimetry can meet the rigorous demands of next-generation particle physics experiments.

Standard Model properties and rare processes

Data from LHC Run 1 and Run 2 have confirmed the predictions of the Standard Model (SM), but have not provided any signs of new physics beyond the Standard Model (BSM). I am convinced that the key to uncovering BSM physics lies in rigorous testing of the SM through precise measurements and the investigation of rare processes. The upcoming phase of the LHC presents an excellent opportunity for such explorations.

Candidate event in which a W and a Z boson are produced in association with two forward jets. An electron from the decay of the W is represented by the green line (its trajectory) and the green boxes (representing its energy deposit in the electromagnetic calorimeter). The Z decays into two muons, represented by the red lines and the red boxes (representing the muon chambers). The two jets are represented by the orange cones. Event display taken from here.

In this context, Vector Boson Scattering (VBS) and Vector Boson Fusion (VBF) are fundamental predictions of the SM, rooted in the non-Abelian gauge structure of electroweak interactions. While studying Higgs resonances remains a primary avenue for identifying inconsistencies with the SM, VBS processes also offer promising leads. Specifically, Electroweak Symmetry Breaking (EWSB) governs vector boson scattering and prevents issues like unitarity violation and high-energy divergences. Any alterations in the Higgs boson's couplings to vector bosons could disturb this equilibrium, potentially leading to notable increases in VBS rates. This makes VBS an ideal candidate for a model-independent search for new physics.

In line with this, I joined the Standard Model Physics Group (SMP) at CMS in 2020 and took on the role of convener for the Diboson Group (SMP-VV). My responsibilities include overseeing and leading various analyses, from inception through to the internal review process for publication. The group's focus is on producing results related to total fiducial and differential cross-sections, as well as VBS and anomalous couplings measurements, using two or more vector bosons in the final state.

Additionally, I am actively involved in two analyses that employ Effective Field Theory (EFT) interpretations to search for BSM physics. These analyses focus on the inclusive and VBF ZZ() processes.

Upgrade of the CSC Muon System for the CMS Detector at the HL-LHC

During the LHC's second long shutdown, I led the critical upgrade of the CMS forward muon system's Cathode Strip Chambers (CSC), detailed in [1]. This undertaking, essential for meeting the High-Luminosity LHC (HL-LHC) challenges, involved a comprehensive overhaul of the CSC electronic readout to handle increased particle flux, trigger latency, and rates. I oversaw the replacement of electronic boards in all 180 chambers, focusing on the innermost chambers closest to the LHC beam and coordinating the CSC retrofitting team. This task was complicated by the COVID-19 pandemic, during which I, alongside a small group of dedicated students, led the electronics upgrades and testing amid lockdowns.

Photo of myself and a student replacing the electronics of the CMS CSCs during the LHC's second long shutdown.

In 2019, we encountered a significant issue: the optical transceivers on the upgraded on-chamber boards showed signs of overheating. To address this, I designed and tested various copper cover prototypes at CERN's building 904. This process was instrumental in selecting the most effective design to mitigate the overheating problem, ensuring the smooth functioning of the upgraded CSC system in the challenging conditions of the HL-LHC.

After the CSC subsystem upgrade, my focus shifted to the development and testing of the new CSC Front End Driver (FED), crucial for data recording from the CSC and Gas Electron Multiplier (GEM) subsystems. The upgrade employs the Advanced Telecommunications Computing Architecture (ATCA) and is expected to handle up to 600 GB/s of data, aiming for a total acquisition rate of 1.3 Tb/s. My recent efforts involve testing the prototype X2O board, with tasks like validating the combined GEM/CSC data acquisition system configuration still ahead.

Dark Matter Search

Since joining Northeastern University in 2018, my research has been centred on uncovering the nature of Dark Matter (DM), which is known to interact gravitationally and potentially weakly with Standard Model (SM) particles. My focus has been on the Mono-Z approach, involving the associated production of DM candidates with a Z boson, a method that looks for events where a Z boson recoils against missing transverse momentum in proton-proton collisions at the LHC.
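The two quantities at the heart of a Mono-Z selection, the dilepton invariant mass and the missing transverse momentum, can be sketched as follows. This is a minimal illustration with hypothetical function names and massless leptons; it is not the CMS reconstruction software.

```python
import math

def four_vector(pt, eta, phi, mass=0.0):
    """Convert (pT, eta, phi, m) to (E, px, py, pz), all in GeV."""
    px, py, pz = pt * math.cos(phi), pt * math.sin(phi), pt * math.sinh(eta)
    energy = math.sqrt(px**2 + py**2 + pz**2 + mass**2)
    return energy, px, py, pz

def invariant_mass(p1, p2):
    """Invariant mass of the sum of two four-vectors."""
    e, px, py, pz = (a + b for a, b in zip(p1, p2))
    return math.sqrt(max(e**2 - px**2 - py**2 - pz**2, 0.0))

def missing_pt(visible):
    """Missing transverse momentum: minus the vector sum of the visible (px, py)."""
    mex = -sum(p[1] for p in visible)
    mey = -sum(p[2] for p in visible)
    return math.hypot(mex, mey), math.atan2(mey, mex)

# Toy event: two leptons and nothing else visible.
lep1 = four_vector(50.0, 0.0, 0.0)
lep2 = four_vector(50.0, 0.0, math.pi / 2)
print(invariant_mass(lep1, lep2), missing_pt([lep1, lep2]))
```

In a signal-like event, the dilepton mass sits near the Z boson mass and the missing transverse momentum points roughly opposite to the dilepton system.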

Our analysis [1] used data from the CMS experiment, spanning 2016-2018 and corresponding to an integrated luminosity of 137 fb^-1 at 13 TeV. The study aimed to explore physics beyond the SM through various theoretical frameworks:

- Simplified DM models featuring vector, axial-vector, scalar, and pseudoscalar mediators.

- Invisible decay channels of a SM-like Higgs boson.

- A two-Higgs-doublet model with an additional pseudoscalar.

In addition to DM-centric theories, alternative models like large extra dimensions and unparticle production are considered due to their potential to produce similar experimental signatures. Despite extensive analysis, no deviations from the SM have been observed. Our current results extend the exclusion limits beyond those established by earlier studies, which analyzed partial data samples. Specifically, the extended limits pertain to simplified DM mediators, gravitons, and unparticles. In the context of a SM-like Higgs boson, our findings set an upper limit of 29% for its invisible decay probability at a 95% confidence level.

90% CL upper limits on DM-nucleon cross sections for simplified DM models, showcasing both spin-independent (left, with vector couplings) and spin-dependent (right, with axial-vector couplings) cases. The quark coupling is g_q = 0.25 and the DM coupling is g_\chi = 1. Includes data from key experiments like XENON1T and LUX (spin-independent), and PICO-60 and IceCube (spin-dependent).

Further, the analysis addresses the two-Higgs-doublet model, probing pseudoscalar mediator masses up to 440 GeV and heavy Higgs boson masses up to 1200 GeV, under specified benchmark parameters. The comprehensive examination of the complete Run 2 dataset in the Mono-Z analysis not only imposes stringent limits on DM particle production across various model types but also facilitates the comparison of these results with direct-detection experiments. This comparison encompasses both spin-dependent and spin-independent scattering cross-sections and extends to constraints on invisible Higgs boson decays, unparticles, and large extra dimensions.

Quark/Gluon tagging for Vector Boson Fusion/Scattering

During Les Houches 2019 [1], I explored the possibility of employing quark/gluon (q/g) jet tagging techniques to tag vector boson fusion (VBF) and vector boson scattering (VBS) processes. The jets associated with VBS and VBF processes at leading order are predominantly quark-initiated; therefore, q/g tagging can be instrumental in separating electroweak signals from the continuum of quantum chromodynamics (QCD) backgrounds. It is particularly valuable in Higgs production, aiding both in isolating the Higgs from Standard Model backgrounds and in discerning different Higgs production modes, including the separation of gluon fusion and VBF Higgs production.

The methodology explored during Les Houches 2019 involves advanced jet classification techniques, including the use of boosted decision trees and the XGBoost library for machine learning-based analysis. The approach integrates jet-substructure variables such as jet angularities and jet multiplicity, along with event-level kinematic variables. These variables are processed through different models, each trained across various kinematic bins. The strategy effectively employs q/g tagging to differentiate between VBF and gluon-gluon fusion Higgs production, leveraging recent advances in tagging technology and anticipated detector upgrades.
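As an illustration of the jet-substructure inputs mentioned above, the sketch below computes generalised jet angularities, using the common definition lambda = sum_i z_i^kappa (dR_i / R)^beta, where z_i is the transverse-momentum fraction of constituent i. The jet radius of 0.4 and the function names are assumptions made for this example; the exact variable set used in [1] may differ.

```python
import math

def delta_r(eta1, phi1, eta2, phi2):
    """Angular distance in the (eta, phi) plane, with phi wrapped into [-pi, pi)."""
    dphi = (phi1 - phi2 + math.pi) % (2.0 * math.pi) - math.pi
    return math.hypot(eta1 - eta2, dphi)

def angularity(constituents, jet_eta, jet_phi, kappa, beta, radius=0.4):
    """Generalised angularity: sum_i z_i^kappa * (dR_i / R)^beta, z_i = pT_i / sum pT."""
    total_pt = sum(pt for pt, _, _ in constituents)
    value = 0.0
    for pt, eta, phi in constituents:
        z = pt / total_pt
        theta = delta_r(eta, phi, jet_eta, jet_phi) / radius
        value += z**kappa * theta**beta
    return value

# Toy jet with three constituents given as (pT, eta, phi) around the axis (0, 0).
jet = [(60.0, 0.0, 0.0), (30.0, 0.1, 0.0), (10.0, 0.0, 0.2)]
multiplicity = angularity(jet, 0.0, 0.0, kappa=0, beta=0)
width = angularity(jet, 0.0, 0.0, kappa=1, beta=1)
print(multiplicity, width)
```

Setting kappa = 0 and beta = 0 recovers the constituent multiplicity, while kappa = 1 and beta = 1 gives a measure of the jet width. Gluon-initiated jets tend to have larger values of both.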

ROC curves for separating VBF Higgs production from ggH Higgs production. The three plots are distinguished by their values, indicated above. The three colored lines in each plot correspond to ROC curves for various levels of information used in the classifiers: jet kinematic information only (blue), additionally including jet constituent track multiplicity (orange), and also including a suite of angularities for the two jets (green)
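For readers unfamiliar with ROC curves, the following sketch shows how one can be built from classifier scores and summarised by its area (AUC). The scores are made up for illustration and the helper names are hypothetical.

```python
def roc_points(signal_scores, background_scores):
    """(background efficiency, signal efficiency) pairs for every score threshold."""
    thresholds = sorted(set(signal_scores) | set(background_scores), reverse=True)
    points = [(0.0, 0.0)]
    for cut in thresholds:
        tpr = sum(s >= cut for s in signal_scores) / len(signal_scores)
        fpr = sum(b >= cut for b in background_scores) / len(background_scores)
        points.append((fpr, tpr))
    return points

def auc(points):
    """Area under the ROC curve by the trapezoidal rule."""
    return sum((x1 - x0) * (y0 + y1) / 2.0
               for (x0, y0), (x1, y1) in zip(points, points[1:]))

# Made-up scores: "signal" stands for VBF-like events, "background" for ggH-like events.
points = roc_points([0.9, 0.4], [0.6, 0.1])
print(auc(points))
```

An AUC of 0.5 corresponds to no separation and 1.0 to perfect separation.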

The study's findings highlight the nuanced interplay between q/g tagging and other event selection criteria. It reveals that adjustments in traditional analysis requirements, such as dijet invariant mass, can optimise the use of q/g tagging. The research demonstrates that while higher dijet mass requirements bias jet distributions to be more forward, there are potential gains in performance by considering simultaneous optimisation of q/g tagging with other variables. This insight paves the way for future research to further explore the correlation between q/g tagging, event kinematic features, and other jet substructure observables.

Simplified Template Cross Sections (STXS)

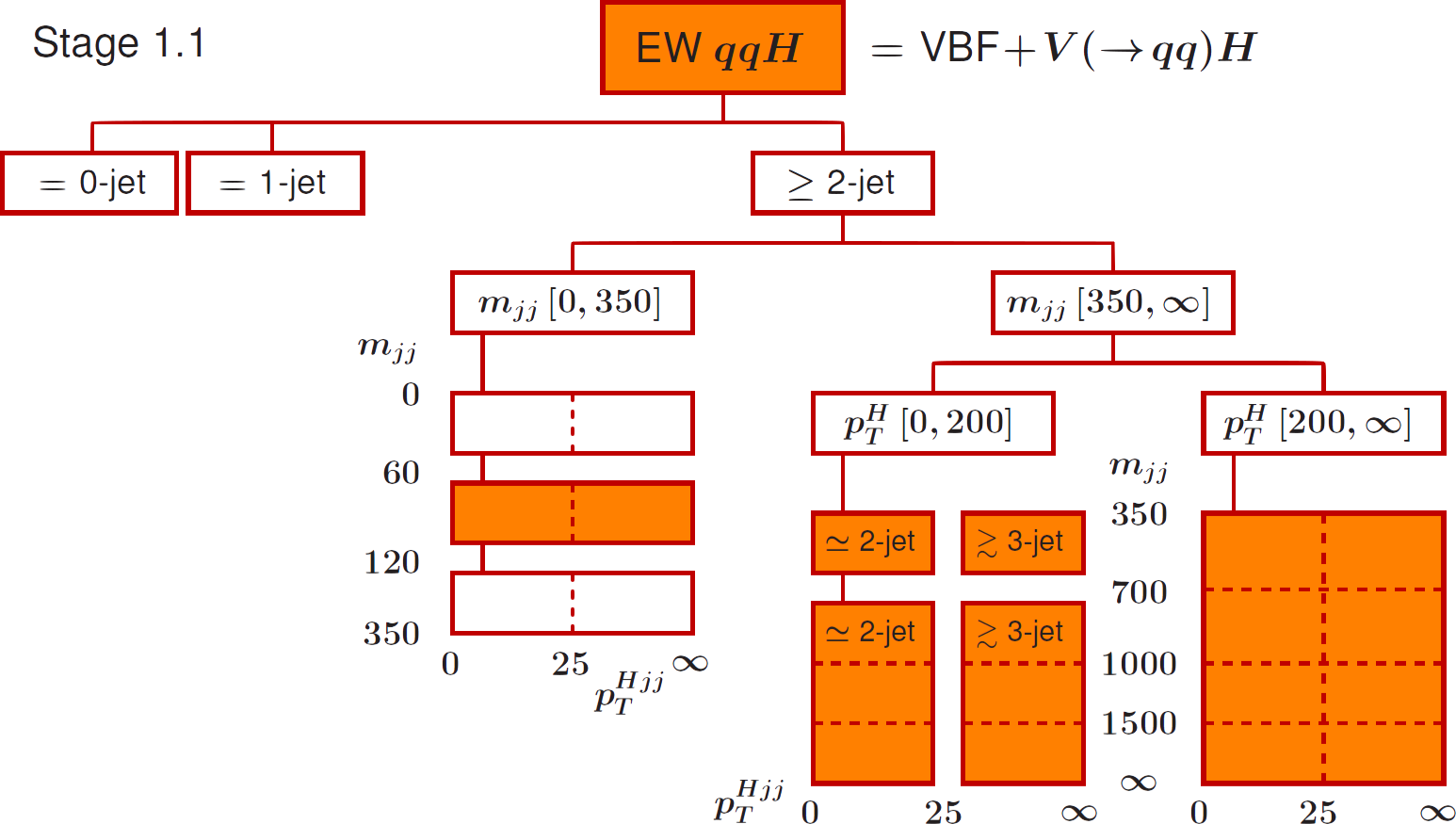

In our paper [1] titled "Simplified Template Cross Sections - Stage 1.1," we delve into the advancements in the framework of Simplified Template Cross Sections (STXS), an approach adopted by LHC experiments for Higgs measurements. STXS aims to minimise theoretical uncertainties in measurements and allows combining measurements from different decay channels and experiments.

This paper introduces the revised definitions of STXS kinematic bins (stage 1.1), developed in response to insights gained from initial STXS measurements and theoretical discussions. This new stage is designed to be used for the full LHC Run 2 datasets by ATLAS and CMS, with a focus on the three main Higgs production modes: gluon-fusion, vector-boson fusion, and association with a vector boson.

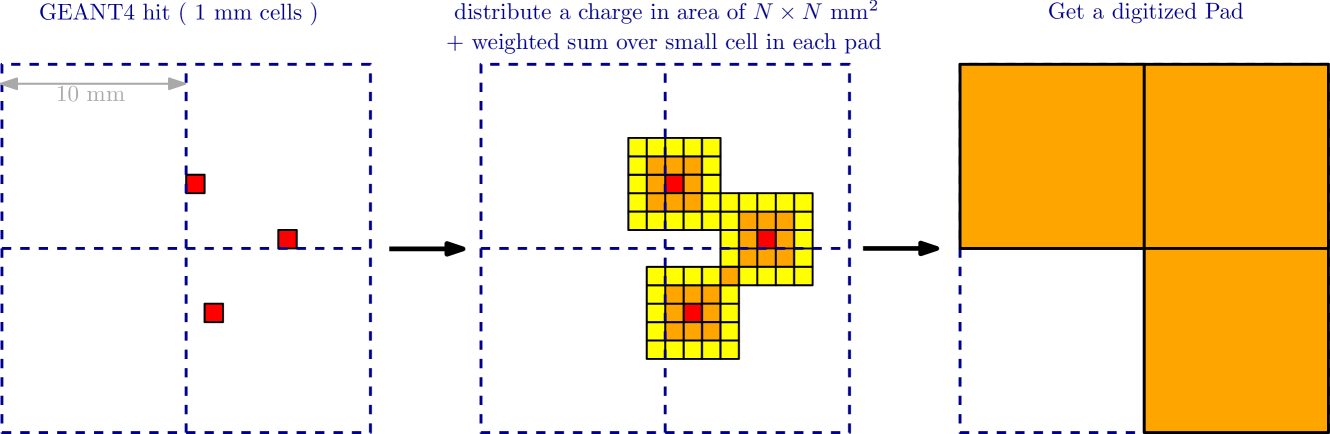


Significantly, we introduced sub-bin boundaries in stage 1.1, aimed at improving the handling of residual theory uncertainties in signal distributions. This granularity, with the inclusion of sub-bins, is expected to surpass experimental sensitivity in the full Run 2 datasets, marking a forward step in precision Higgs measurements.

STXS, defined in mutually exclusive regions of phase space, serve as physical cross sections. The framework's design minimises reliance on theory uncertainties, maximises experimental sensitivity, isolates possible BSM effects, and maintains a manageable number of bins. The definitions for final-state objects in STXS are kept simpler and more idealised compared to fiducial cross-section measurements, allowing for the combination of various decay channels.

Vector Boson Fusion theoretical uncertainties in stage 1.1

Once stage 1.1 was defined, the theoretical uncertainties remained to be determined. I worked with collaborators from ATLAS and CMS to implement a prescription for assigning theoretical uncertainties to the VBF production mode in various kinematic regions. This framework [2] is currently used by both the ATLAS and CMS collaborations, and it has become the official LHC Higgs Working Group recommendation for estimating the theoretical uncertainties of the electroweak Higgs + 2 jets process (check out this talk to learn more).

Beyond Run 2 and CP-sensitive binning

During Les Houches 2019 [3], I explored with a few colleagues a possible extension to include CP-sensitive bins. In the Standard Model, Higgs interactions preserve CP symmetry, so any deviation signals potential physics beyond the Standard Model. The Vector Boson Fusion process, a key production channel at the LHC, is especially notable for testing the CP properties of the Higgs due to its distinct kinematic structure with two forward tagging jets.

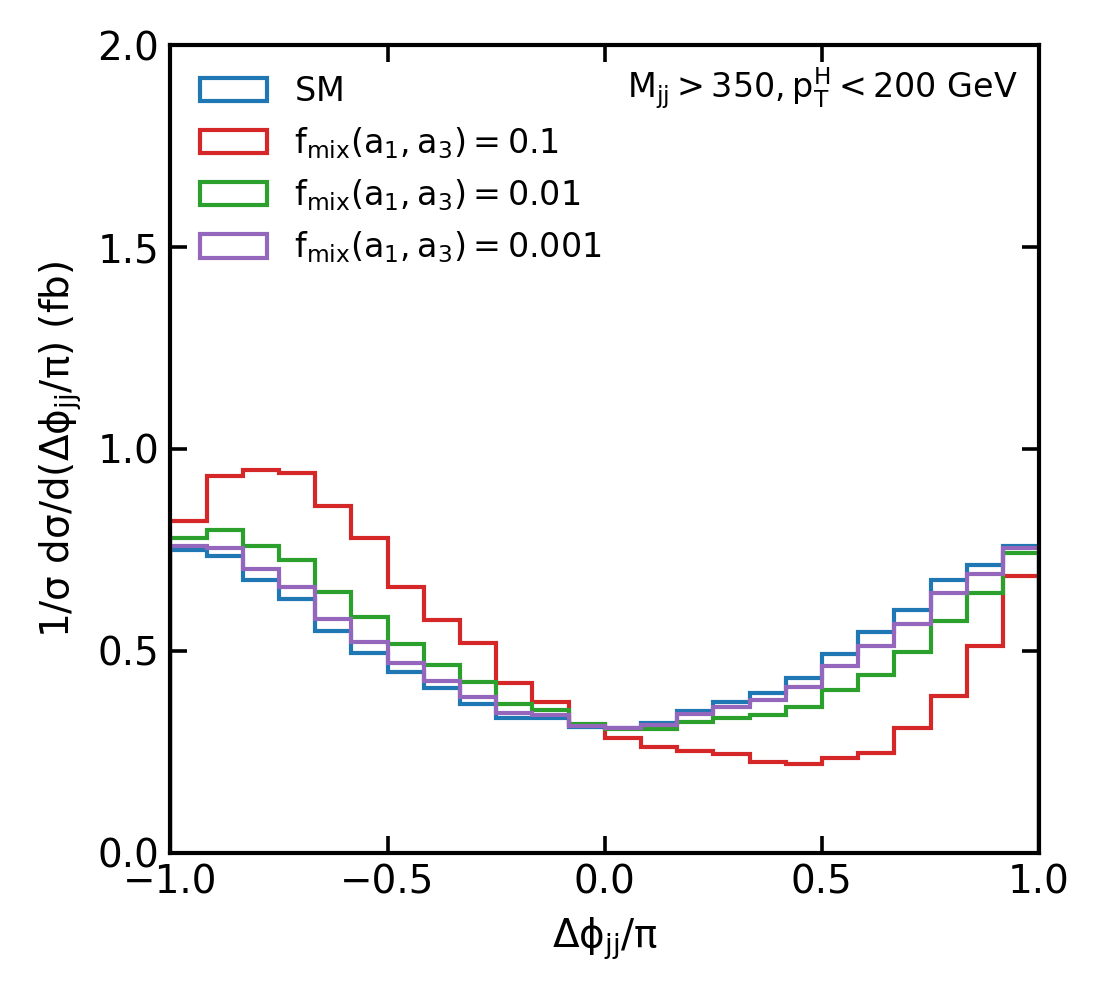

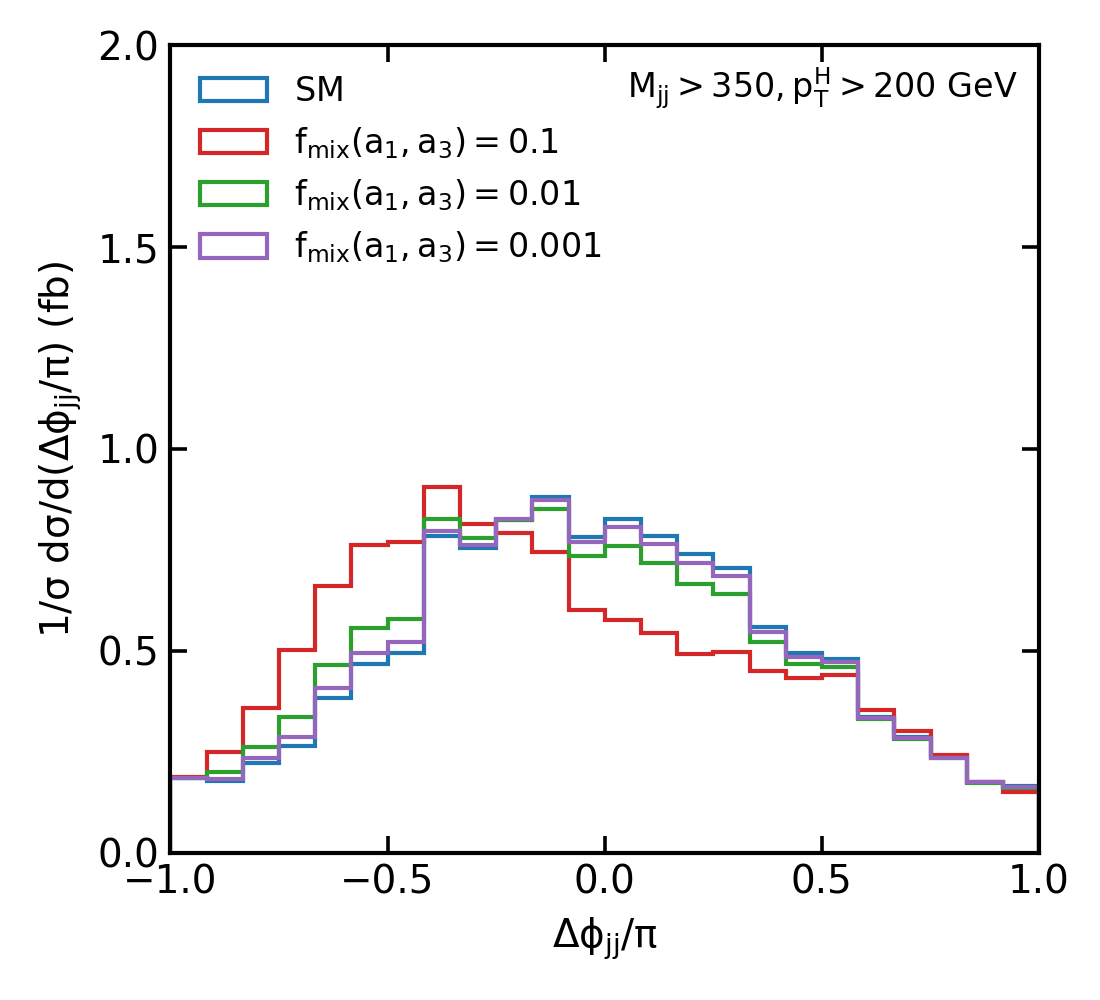

Normalised distributions of the jet-jet signed azimuthal angle difference for various mixed CP scenarios between SM and CP-odd (c, d) couplings. Colours represent different mixing strength values: 0.1%, 1%, 10%.

The study explores the tensor structure of HVV couplings using the azimuthal angle between tagging jets in VBF processes. This angle serves as a critical tool for differentiating CP-even and CP-odd couplings. The study employs the JHUGEN generator interfaced with PYTHIA for simulation, focusing on azimuthal angle distributions to discern CP properties. The Simplified Template Cross Sections framework is refined to include signed azimuthal angle distributions, enhancing the sensitivity to BSM deviations in Higgs boson measurements.

The analysis reveals distinct behaviours in azimuthal angle distributions corresponding to CP-even and CP-odd couplings. Deviations in these distributions would suggest potential CP-violation in the Higgs sector. The study proposes new CP-sensitive binning strategies for azimuthal angle distributions in VBF processes, facilitating a more nuanced understanding of the HVV coupling and CP properties of the Higgs boson. This approach could significantly impact future LHC measurements, offering a refined method to probe for new physics.
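The signed azimuthal angle difference between the tagging jets can be sketched as below. The convention assumed here orders the two jets by pseudorapidity and wraps the difference into [-pi, pi). The four equal-width bins are a hypothetical choice for illustration and are not the binning proposed in [3].

```python
import math

def signed_delta_phi(jet_a, jet_b):
    """Signed azimuthal difference, ordering the jets by pseudorapidity.

    Each jet is (eta, phi). Convention assumed here: phi(forward) - phi(backward),
    wrapped into [-pi, pi).
    """
    forward, backward = (jet_a, jet_b) if jet_a[0] > jet_b[0] else (jet_b, jet_a)
    dphi = forward[1] - backward[1]
    return (dphi + math.pi) % (2.0 * math.pi) - math.pi

def cp_bin(dphi, edges=(-math.pi, -math.pi / 2, 0.0, math.pi / 2, math.pi)):
    """Index of the (hypothetical) bin containing dphi."""
    for index in range(len(edges) - 1):
        if edges[index] <= dphi < edges[index + 1]:
            return index
    return len(edges) - 2  # dphi == pi falls in the last bin

dphi = signed_delta_phi((2.5, 1.0), (-2.0, 0.2))
print(dphi, cp_bin(dphi))
```

Because the jets are ordered by pseudorapidity rather than by transverse momentum, the sign of the result does not depend on the order in which the jets are passed. That ordering is what makes the observable sensitive to CP-odd contributions.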

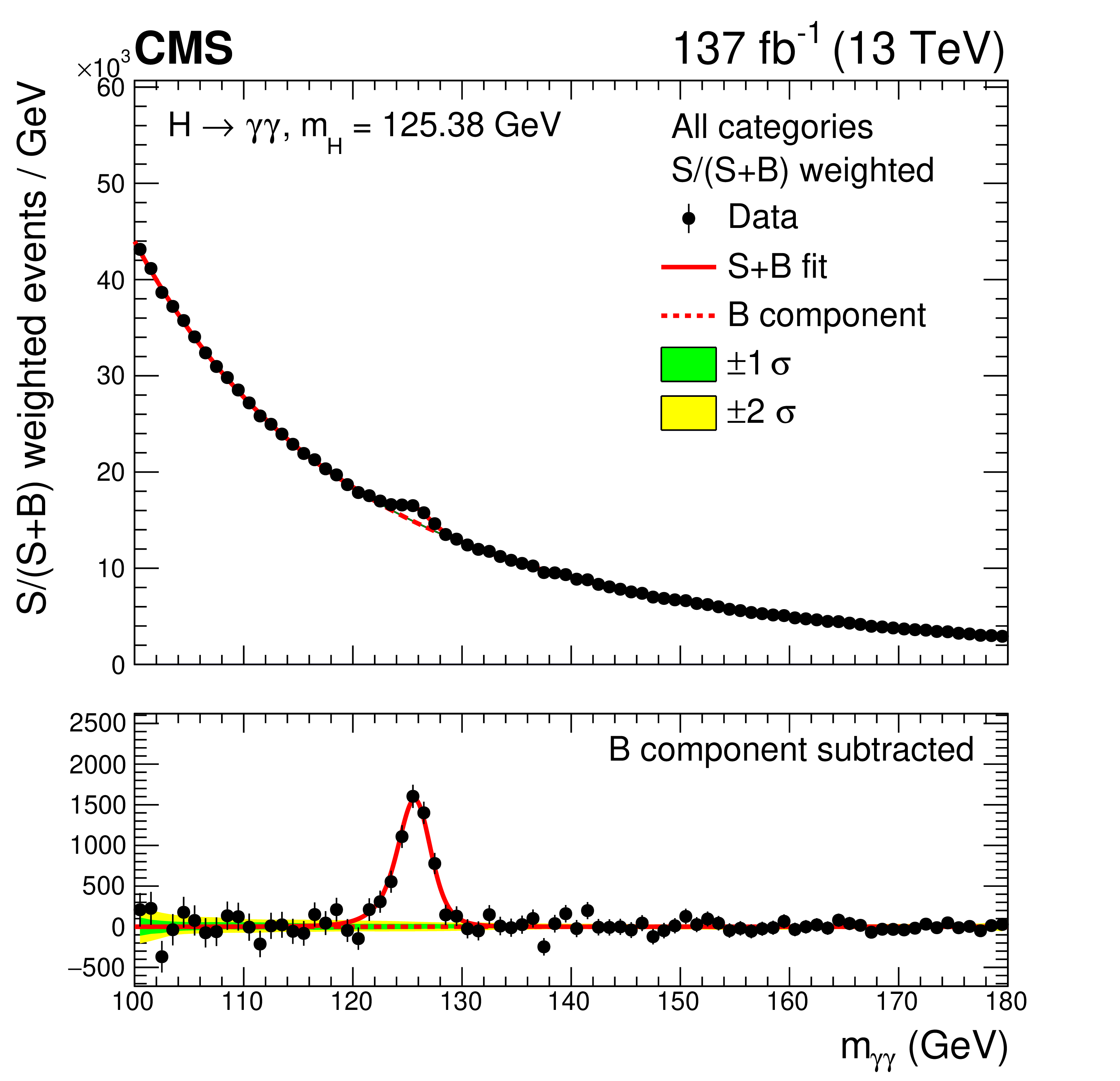

Higgs properties in diphoton decay channel

Since joining the CMS collaboration in 2014, my research has been dedicated to measuring the Higgs boson's properties in the diphoton decay mode. This choice was driven by the pivotal role of this process in the Higgs boson's discovery in 2012. The diphoton decay mode is characterized by its exceptional mass resolution and effective background control, making it an ideal channel for precise measurements of the Electroweak Symmetry Breaking (EWSB) mechanism. As one of the main analysts, I significantly contributed to the first results derived from 13 TeV LHC collisions [1].

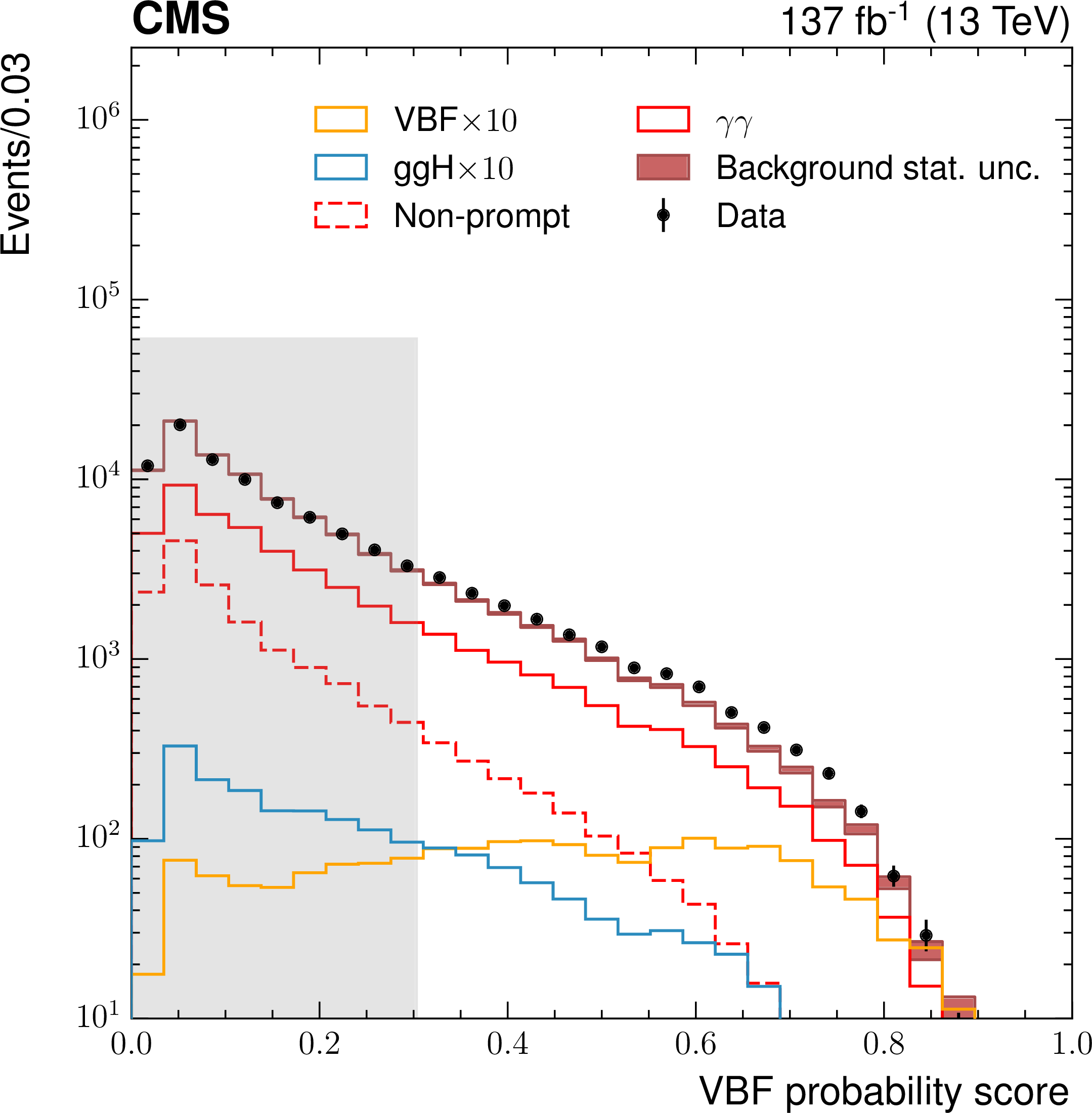

Furthermore, my research has focused on the Vector Boson Fusion (VBF) process, the second-most prevalent mode of Higgs boson production at the LHC. VBF's unique kinematics, marked by two tagging jets in opposing directions and at small angles relative to the central signal decay products, render it a highly sensitive channel for studying Higgs boson properties. I have pioneered various modern Machine Learning (ML) classification algorithms, which have significantly accelerated training and improved tagging performance compared to traditional methods and tools. These approaches have also enhanced background rejection and reduced gluon-gluon fusion contamination, thereby improving the control over associated theoretical uncertainties [2][3][4].

Software digitiser for highly granular gaseous detectors

In the paper [1], we addressed the need for advanced digitisation in calorimeters equipped with gaseous sensors, particularly calorimeters with a large number of channels (highly granular or imaging calorimeters). We propose a method to simulate the pad response in such calorimeters, which is a crucial step for accurately mimicking the energy deposited by charged particles inside the detector and serves as a complementary plugin to general-purpose simulation tools like Geant4.

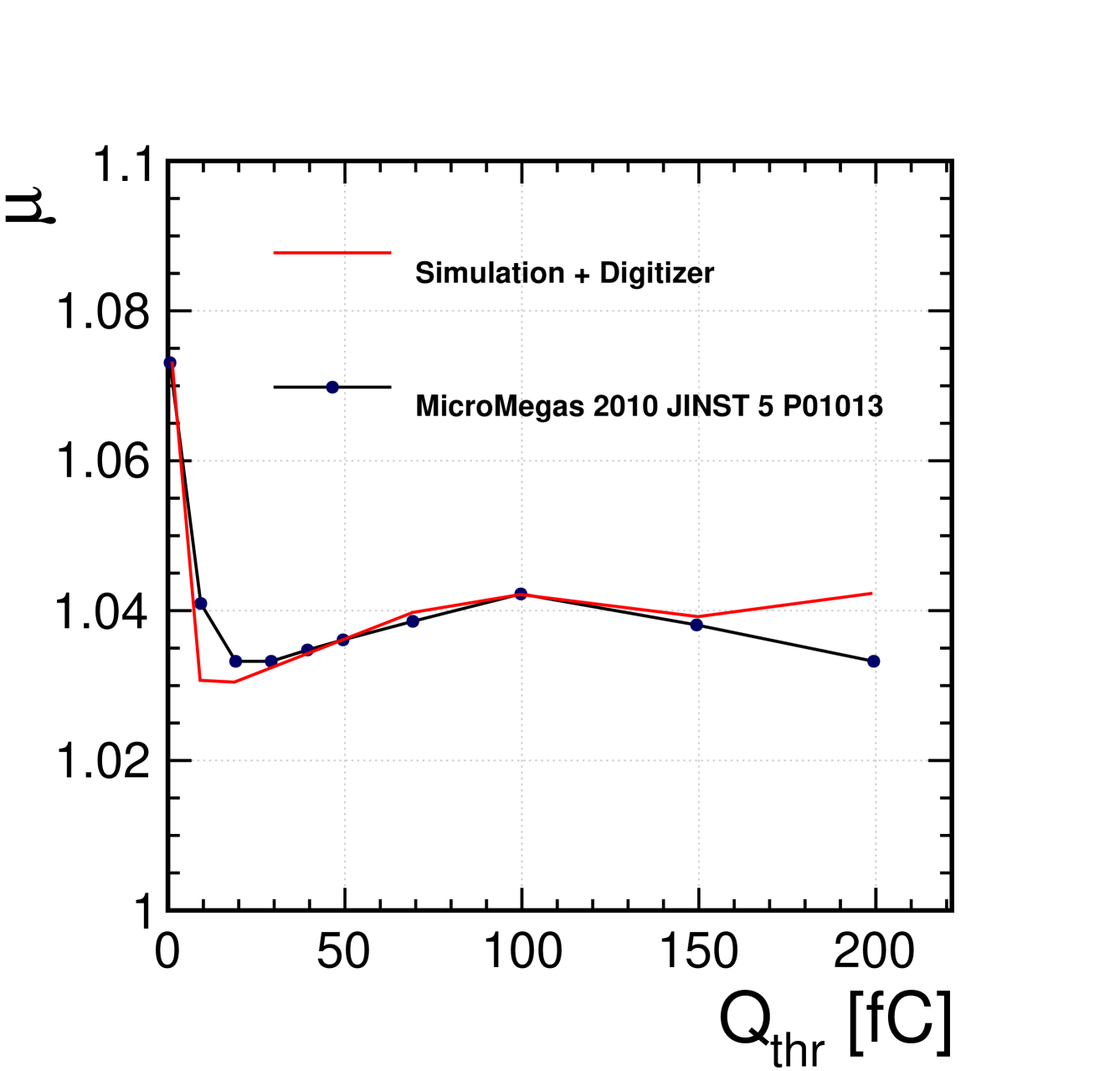

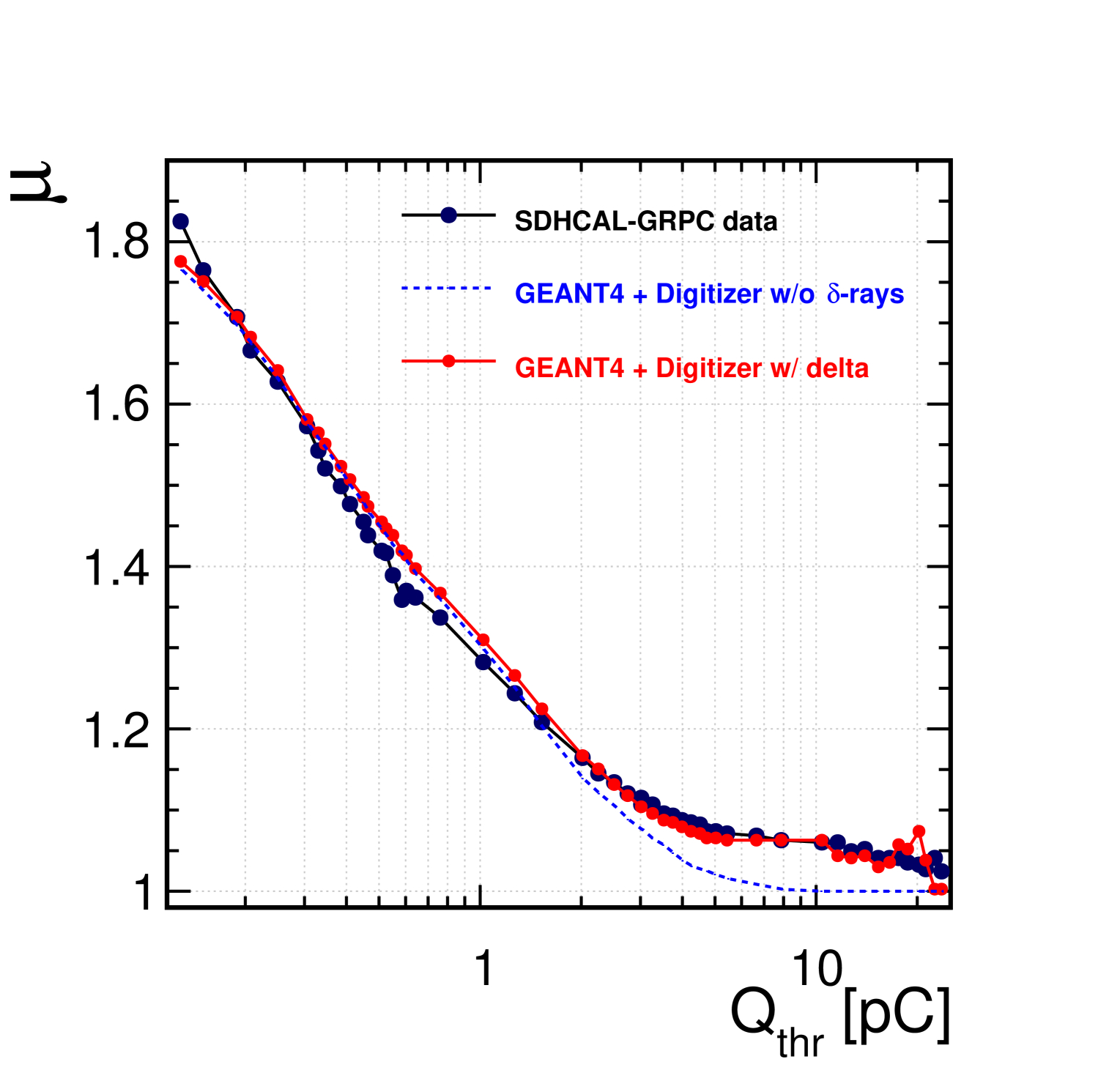

The digitisation process involves replicating pad responses to energy deposits, determining induced charges for each pad, and accounting for charge sharing between neighbouring pads. This method was applied to Glass Resistive Plate Chambers (GRPC) and MicroMegas detectors, with the validation done using test beam data to reproduce parameters like efficiency, multiplicity, and mean number of hits at varying thresholds with high accuracy.

The digitisation of the highly granular gaseous detector involves several key steps:

- Charge Determination: For each simulated hit from the GEANT4 simulation, the induced charge is randomly assigned following the induced charge spectrum probability distribution.

- Charge Distribution: This charge is then distributed to pads based on the position of the GEANT4 hit. The fraction of charge attributed to a pad depends on the detector specifics and pad size.

- Threshold Determination: A pad is considered "fired" if the induced charge is above a certain threshold set for that pad.
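The three steps above can be sketched in a few lines. This is a toy model only: the gamma-shaped charge spectrum, the Gaussian charge footprint, the 3x3 pad neighbourhood, and all parameter values are assumptions chosen for illustration, not the parametrisation used in [1].

```python
import math
import random
from collections import defaultdict

PAD_SIZE = 1.0   # cm, assumed pad pitch
SPREAD = 0.2     # cm, assumed width of the charge footprint

def draw_charge(rng, shape=1.5, mean=1.0):
    """Step 1: sample an induced charge (pC) from an assumed gamma-shaped spectrum."""
    return rng.gammavariate(shape, mean / shape)

def share_charge(x, y, charge):
    """Step 2: split the charge over the 3x3 pads around the hit with Gaussian weights."""
    ix, iy = math.floor(x / PAD_SIZE), math.floor(y / PAD_SIZE)
    weights = {}
    for px in (ix - 1, ix, ix + 1):
        for py in (iy - 1, iy, iy + 1):
            cx, cy = (px + 0.5) * PAD_SIZE, (py + 0.5) * PAD_SIZE
            d2 = (x - cx) ** 2 + (y - cy) ** 2
            weights[(px, py)] = math.exp(-d2 / (2.0 * SPREAD**2))
    norm = sum(weights.values())
    return {pad: charge * w / norm for pad, w in weights.items()}

def digitise(hits, threshold, rng):
    """Step 3: sum the charge per pad and keep the pads above the threshold."""
    pad_charge = defaultdict(float)
    for x, y in hits:
        for pad, q in share_charge(x, y, draw_charge(rng)).items():
            pad_charge[pad] += q
    return {pad for pad, q in pad_charge.items() if q > threshold}

# Two simulated hits (x, y in cm), digitised with an assumed 0.1 pC threshold.
fired = digitise([(0.5, 0.5), (0.95, 0.5)], threshold=0.1, rng=random.Random(1))
print(sorted(fired))
```

A hit close to a pad boundary shares more of its charge with the neighbouring pad, which is how such a model produces multiplicities above one for a single particle.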

Schematic description of the working principle of the digitizer. A matrix of cells with corresponding weights is created around the simulated cell (GEANT4 hit). A weighted sum is calculated for each pad (bounded by the dashed lines). The digitized pads are the ones which reach the threshold.

This procedure aims to replicate the pad response to energy deposits accurately, which is crucial for realistic simulations in particle detectors like GRPC and MicroMegas. The method effectively models sensor and electronic responses, allowing for more precise simulations in particle physics research.

Multiplicity of MicroMegas and GRPC versus detection threshold. The red and the black lines represent the simulation (GEANT4 + digitizer) and measured sensor data. The dashed blue line represents the use of the digitizer without including the -rays effect.

In a follow-up paper [2] titled "Resistive Plate Chamber Digitisation in a Hadronic Shower Environment", we concentrated on simulating the prototype's response using a different digitisation algorithm called SimDigital, which converts simulated energy deposition into semi-digital information. Unlike the previous algorithm, this approach integrates the charge distribution to assign an amplitude to each pad. The results show good agreement with hadronic shower data up to 50 GeV, but discrepancies at higher energies suggest that proton contamination doesn't fully explain the variance between data and simulation at these energies. Although this method could be more accurate, the previous method might have an advantage when simulating an even higher number of channels, as it has the potential to be parallelised without analytical integration.

High Rate Resistive Plate Chamber for LHC detector upgrades

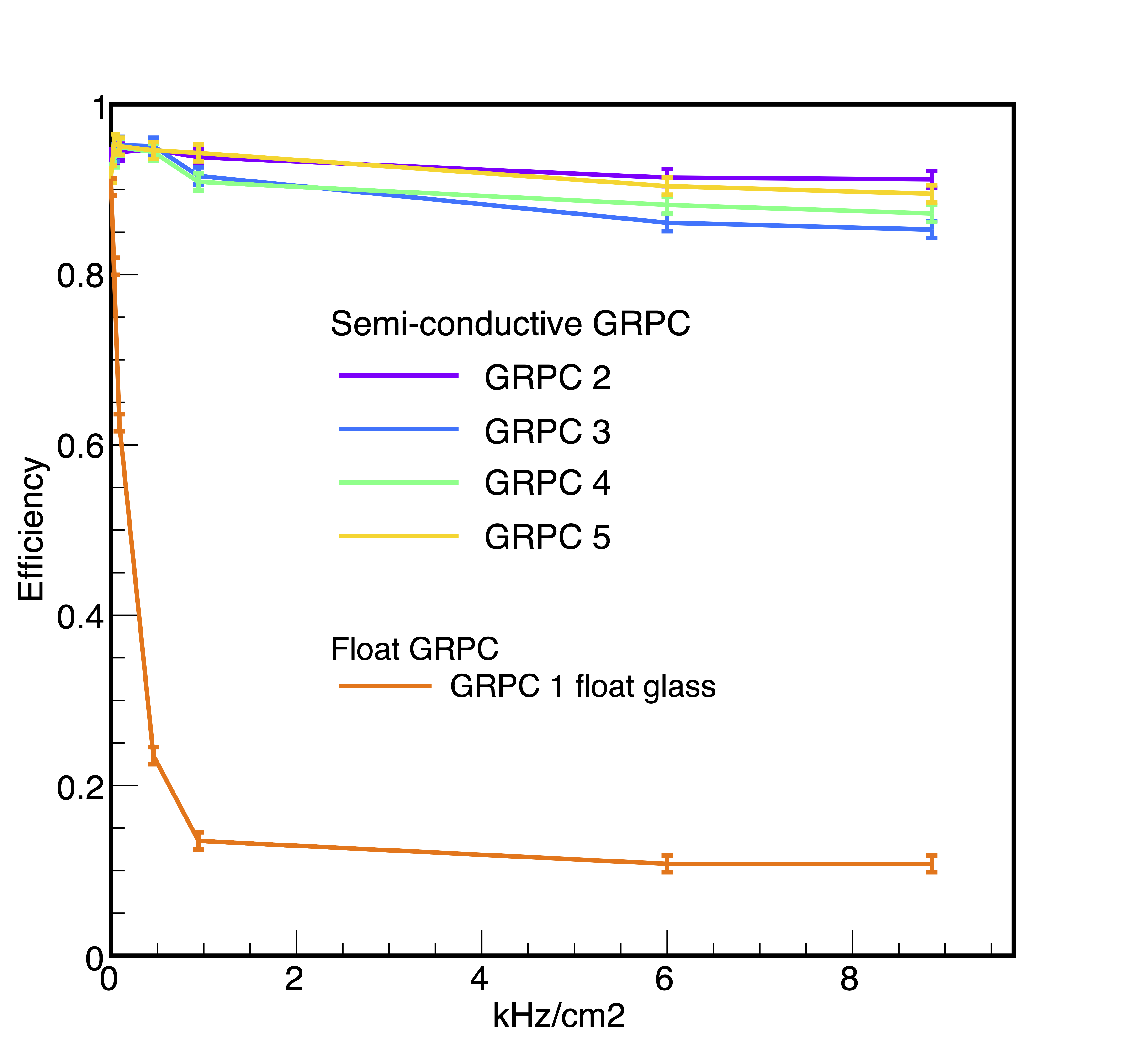

The bakelite resistive plate chambers (RPCs) used as muon detectors at the Large Hadron Collider (LHC) experiments have inherent limitations in detection rate that restrict their deployment in the high-rate regions of both the CMS and ATLAS detectors. Addressing this challenge, we have explored an innovative alternative: RPCs constructed with low resistivity glass plates, specifically at a resistivity of . Preliminary tests that we conducted at DESY provided promising results [1], indicating that these detectors could operate at a few thousand Hz/cm^2 while maintaining an efficiency greater than 90%.

The underlying mechanism of the Glass RPC (GRPC) detector is rooted in the ionisation produced by charged particles traversing a gas gap. This gap, precisely 1.2 mm, is filled with a specialised gas mixture comprising 93% TFE (C2H2F4), 5% CO2, and 2% SF6. When subjected to a high voltage ranging between 6.5 kV and 8 kV, the system ensures charge multiplication in avalanche mode, achieving a typical gain of 10^7. The standout feature of this detector is the doped silicate glass's low resistivity, a marked improvement from the typical of float glass. To capture the signals, copper pads were employed, which then interfaced with a semi-digital readout system.

To rigorously assess the performance of these RPCs, an extensive test was set up at DESY in January 2012. Utilising the DESY II synchrotron, known for its electron beam with energies up to 6 GeV and a maximum particle rate of 35 kHz, the team could simulate real-world conditions. To measure the beam rate, two scintillator detectors were strategically positioned upstream of the main detector. Additionally, a GRPC made with standard glass was integrated into the experimental setup, serving both as a control and a benchmark.

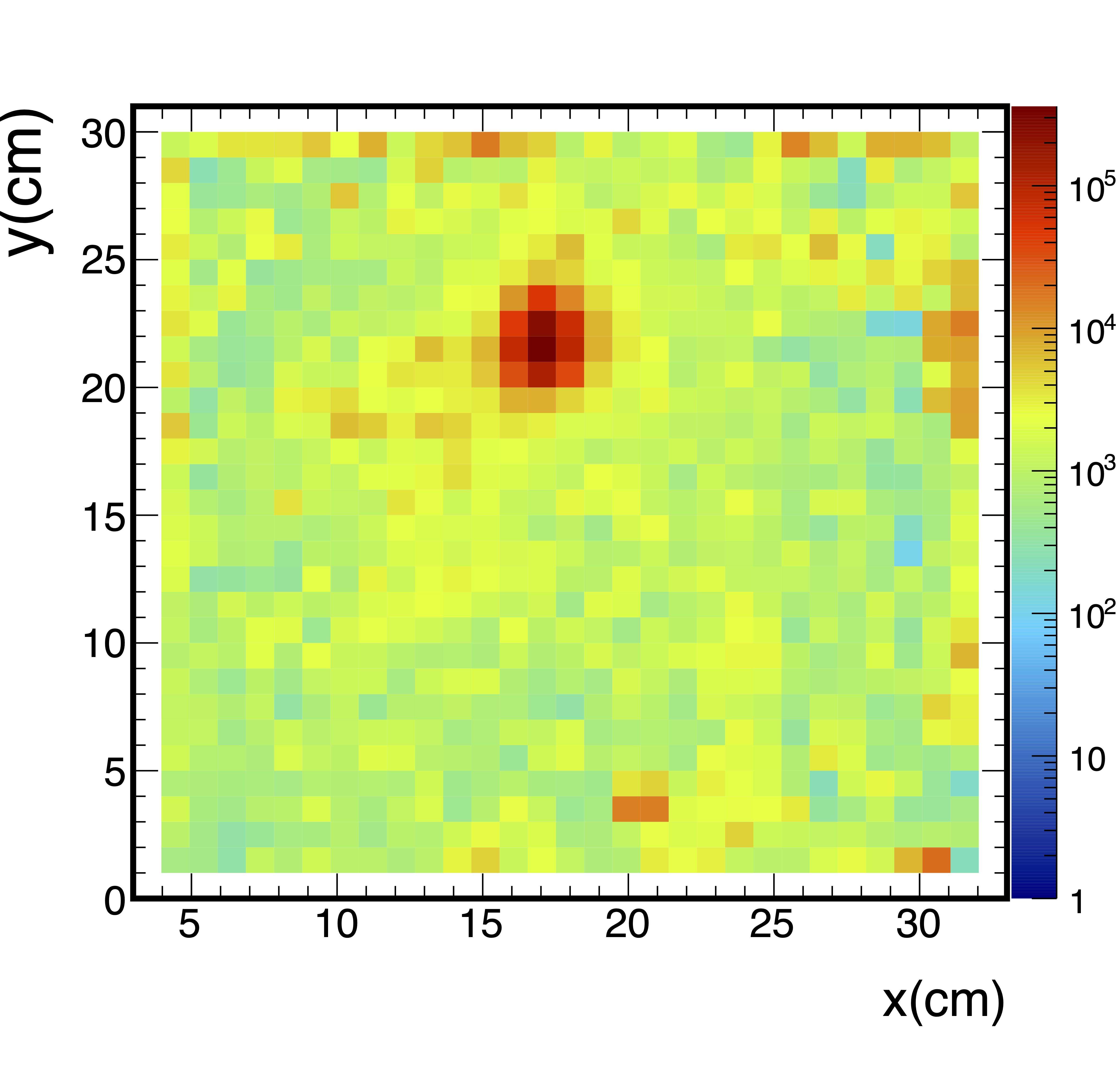

The outcomes of these tests were illuminating. Local efficiency and multiplicity of the GRPC were meticulously measured. The efficiency, representing the fraction of tracks with a multiplicity greater than or equal to 1, and the multiplicity, defined as the number of fired pads within 3 cm of the expected position, were both evaluated against the polarisation high voltage. With the scintillator detectors' data, the team derived the total particle flux, subsequently calculating the rate per unit area. These empirical findings underscored the potential of the GRPC, heralding a new era for muon detectors in high-energy physics experiments.
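The efficiency and multiplicity definitions above translate directly into code. In the sketch below the event format and function names are hypothetical, and averaging the multiplicity over efficient tracks only is an assumption made for this example.

```python
import math

def track_multiplicity(expected, fired_pads, radius_cm=3.0):
    """Number of fired pads within radius_cm of the expected track position."""
    ex, ey = expected
    return sum(1 for x, y in fired_pads if math.hypot(x - ex, y - ey) <= radius_cm)

def efficiency_and_multiplicity(events):
    """events: list of (expected_xy, fired_pads). Returns (efficiency, mean multiplicity).

    The mean multiplicity is taken over efficient tracks only (an assumption here).
    """
    counts = [track_multiplicity(expected, pads) for expected, pads in events]
    efficient = [n for n in counts if n >= 1]
    efficiency = len(efficient) / len(counts)
    mean_mult = sum(efficient) / len(efficient) if efficient else 0.0
    return efficiency, mean_mult

def rate_per_area(n_particles, duration_s, area_cm2):
    """Particle rate per unit area in Hz/cm^2."""
    return n_particles / (duration_s * area_cm2)

# Four toy tracks: positions in cm, pads given by their centre coordinates.
events = [
    ((0.0, 0.0), [(0.5, 0.5), (1.5, 0.5)]),
    ((0.0, 0.0), []),
    ((10.0, 10.0), [(10.5, 10.5)]),
    ((0.0, 0.0), [(5.0, 5.0)]),
]
print(efficiency_and_multiplicity(events), rate_per_area(9000, 3.0, 1.5))
```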

High granularity Semi-Digital Hadronic Calorimeter: SDHCAL

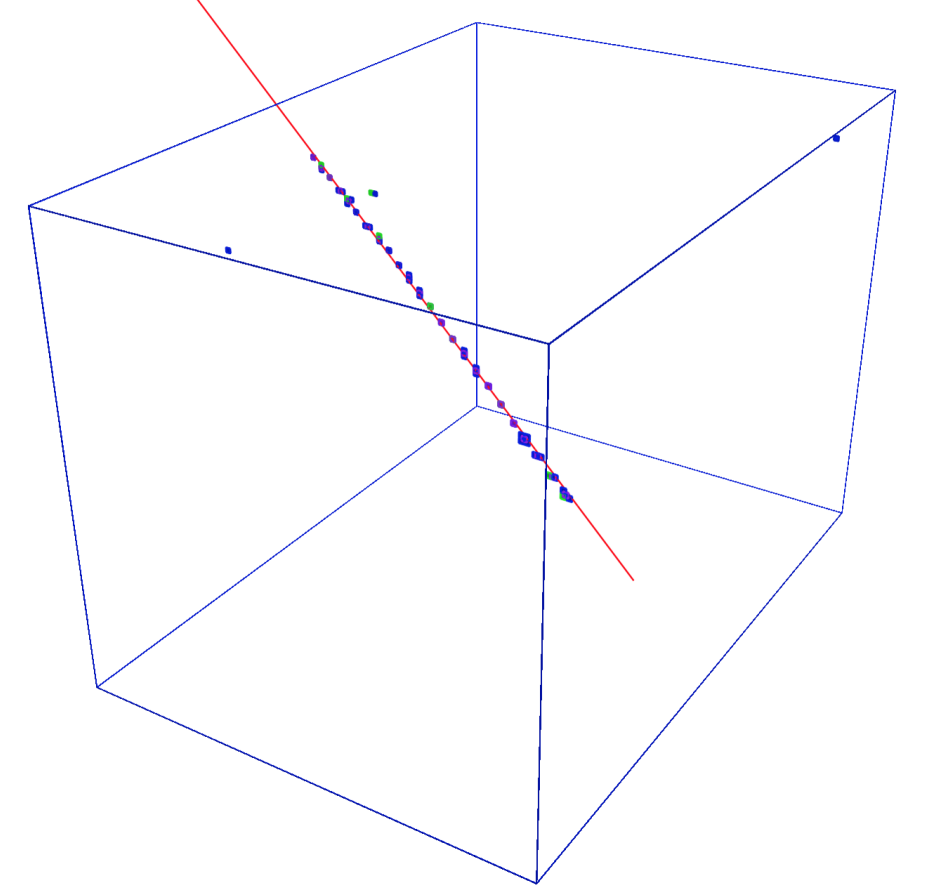

The idea of the Semi-Digital Hadronic Calorimeter (SDHCAL) originated from the need for highly granular calorimeters in future high-energy physics experiments, such as those proposed for the International Linear Collider (ILC). The goal was to design a calorimeter capable of precise energy measurement of jets, integrating tracking capabilities essential for Particle Flow Algorithms (PFA). The SDHCAL was built as a sampling calorimeter with 48 active Glass Resistive Plate Chambers (GRPC) layers incorporating embedded readout electronics. Its innovative design focuses on high granularity, compactness, and efficient power consumption. The GRPCs were constructed with glass plates, resistive coating, and a unique gas distribution system to enhance performance. I had the chance to work on this project from its early beginnings, and it was the subject of my PhD thesis.

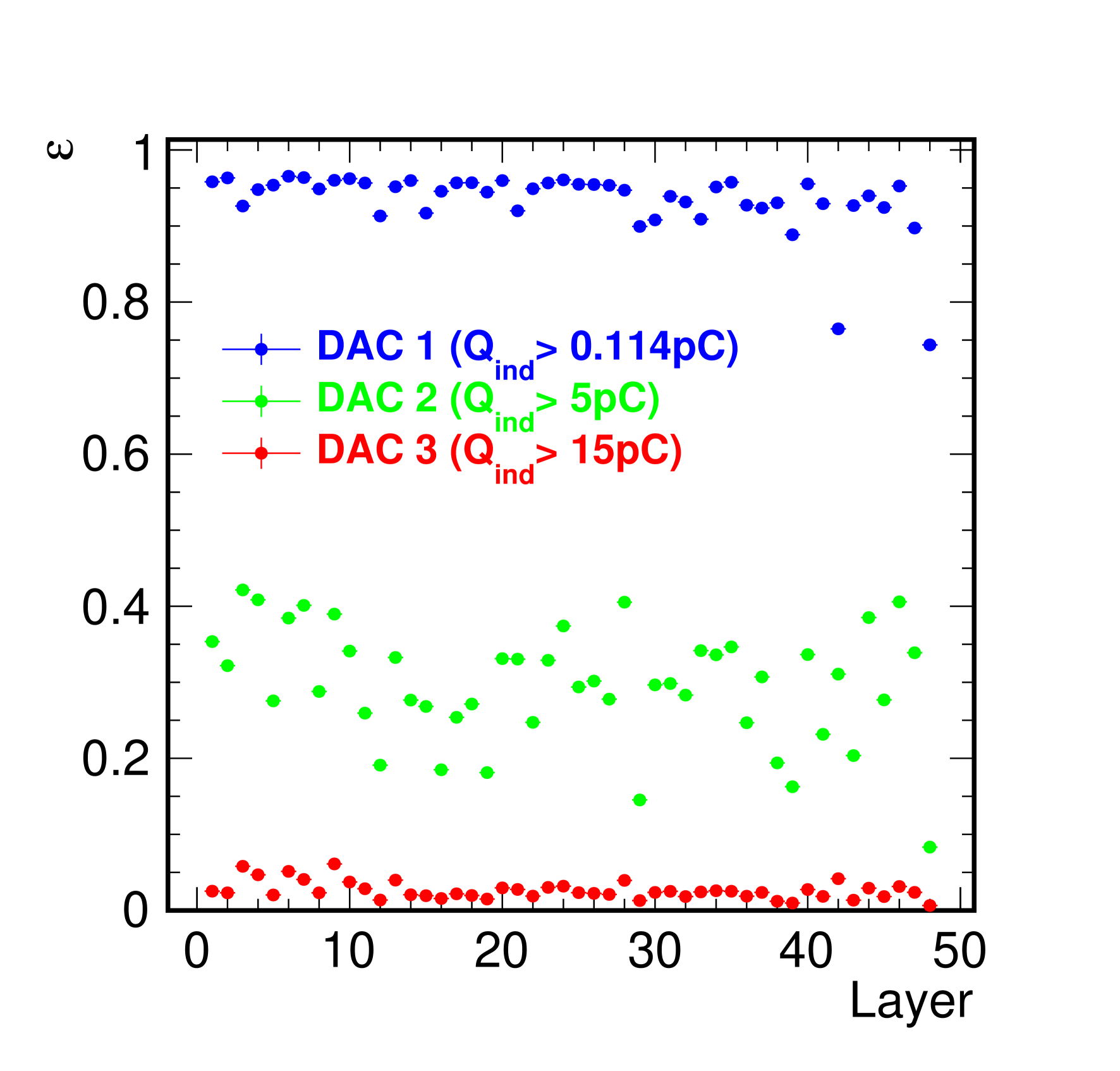

The SDHCAL's operation is characterised by its three-threshold (semi-digital) readout system. The thresholds are designed to distinguish pads crossed by varying numbers of charged particles, enhancing hadronic shower energy measurements. The HARDROC ASICs used in the readout process auto-trigger and store data, ensuring detailed tracking and efficient data acquisition. The prototype was extensively tested at CERN's Super Proton Synchrotron (SPS) with different beams (pions, muons, and electrons) to evaluate its performance under varied conditions. These test beams were crucial for assessing the SDHCAL's efficiency, linearity, and response to hadronic showers.
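A semi-digital readout of this kind can be sketched as a mapping from pad charge to a two-bit threshold level, followed by a weighted hit count. The threshold values and the per-hit weights below are placeholders chosen for illustration. They are not the prototype's settings, and the energy reconstruction used in [1] may be more elaborate.

```python
from collections import Counter

# Assumed thresholds in pC; illustrative only, not the prototype's settings.
THRESHOLDS = (0.1, 5.0, 15.0)

def threshold_level(charge):
    """Return 0 (no hit) or 1-3 for the highest threshold the charge passes."""
    return sum(1 for t in THRESHOLDS if charge >= t)

def count_hits(pad_charges):
    """Count pads whose highest passed threshold is 1, 2, or 3."""
    levels = Counter(threshold_level(q) for q in pad_charges)
    return levels[1], levels[2], levels[3]

def reconstructed_energy(pad_charges, weights=(0.04, 0.10, 0.30)):
    """Weighted hit count E = a*N1 + b*N2 + c*N3 with placeholder weights (GeV/hit)."""
    return sum(w * n for w, n in zip(weights, count_hits(pad_charges)))

charges = [0.05, 0.2, 1.0, 6.0, 20.0, 30.0]  # toy pad charges in pC
print(count_hits(charges), reconstructed_energy(charges))
```

Giving a larger weight to pads that pass the higher thresholds is what lets the readout partly compensate for saturation in the dense core of a shower, where several particles cross the same pad.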

The findings in [1] from these tests demonstrated the SDHCAL's high efficiency and capability for detailed particle tracking. The prototype showed a linear response to different particle types and energies. Advanced methods were employed to analyse the data. For example, the fractal dimension was introduced as an additional variable to differentiate between electromagnetic and hadronic showers and so reduce electron contamination in the hadronic samples. The determination of this quantity involved the box-counting algorithm, which counts the number of hits while varying the virtual cell size, helping to distinguish between different types of particle showers based on their self-similarity. This method proved effective in refining the selection of hadronic showers, thereby enhancing the accuracy of the energy resolution studies.
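The box-counting idea can be sketched as follows: hits are regrouped into virtual cells of increasing size, and the way the number of occupied cells shrinks gives a dimension estimate. The estimator used here, the average of log(N_1 / N_s) / log(s) over a few scales, and the choice of scales are assumptions for illustration and may differ from the definition in [1].

```python
import math

def box_count(hits, scale):
    """Number of distinct virtual cells of side `scale` (in pad units) containing a hit."""
    return len({(i // scale, j // scale, k // scale) for i, j, k in hits})

def fractal_dimension(hits, scales=(2, 3, 4, 6, 8)):
    """Average of log(N_1 / N_s) / log(s) over the chosen scales.

    hits: iterable of integer cell indices (i, j, k).
    """
    n1 = box_count(hits, 1)
    estimates = [math.log(n1 / box_count(hits, s)) / math.log(s) for s in scales]
    return sum(estimates) / len(estimates)

# Sanity checks on idealised shapes: a straight track and a filled plane.
track = [(0, 0, k) for k in range(48)]
plane = [(i, j, 0) for i in range(24) for j in range(24)]
print(fractal_dimension(track), fractal_dimension(plane))
```

A straight, track-like set of hits gives a dimension close to 1 and a filled plane gives 2. Showers fall in between, with their value depending on how compact and dense they are.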

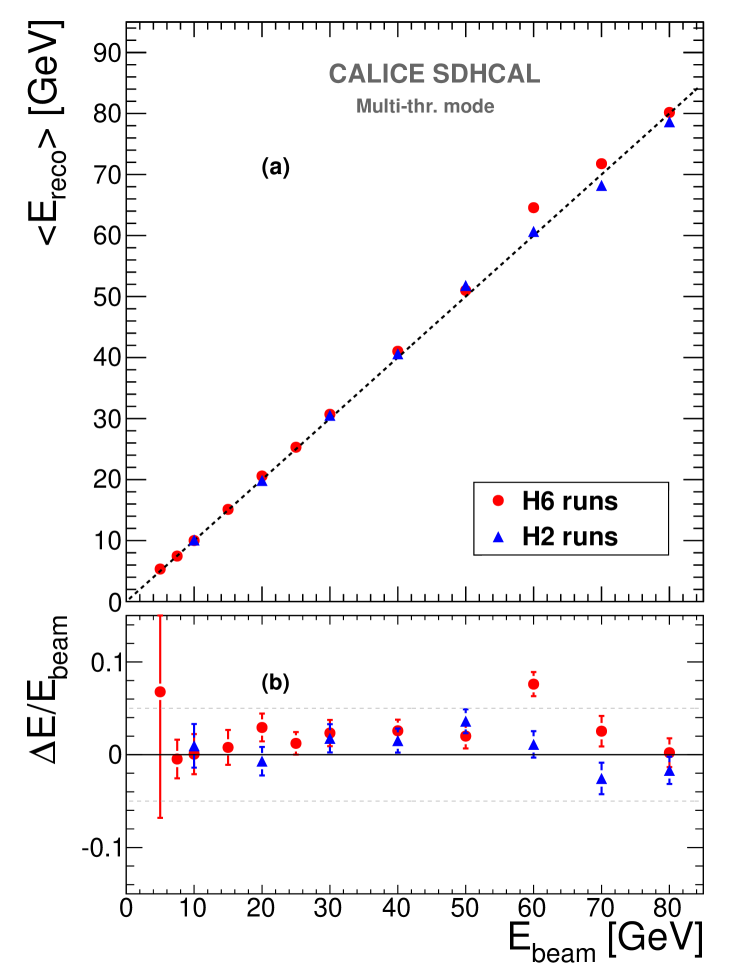

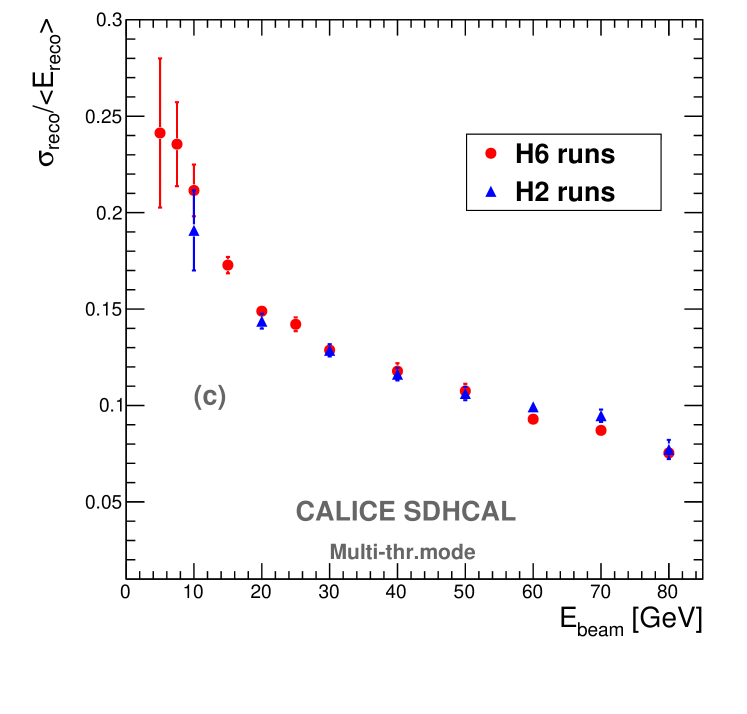

Mean reconstructed energy for pion showers as a function of the beam energy (a) of the 2012 H2 (blue) and the 2012 H6 (red) data. The dashed line passes through the origin with unit gradient. Relative deviation of the pion mean reconstructed energy with respect to the beam energy as a function of the beam energy (b) of the 2012 H2 (blue) and the 2012 H6 (red) data. The reconstructed energy is computed using the three thresholds information as described in section 6.2. (c) is the relative resolution of the reconstructed hadron energy as a function of the beam energy of the 2012 H2 (blue) and the 2012 H6 (red) data.

The resolution associated with the linearized energy response of the same selected data sample is also estimated in both the binary and the multi-threshold modes. The multi-threshold capabilities of the SDHCAL clearly improve the resolution at high energy (>30 GeV). This relative improvement, which reaches 30% at 80 GeV, is probably related to a better treatment of the saturation effect thanks to the information provided by the second and third thresholds. The energy resolution reaches 7.7% at 80 GeV. Two further steps are likely to improve the hadronic energy estimation and should be investigated in future work: exploiting the topological information provided by such a high-granularity calorimeter to account for saturation and leakage effects, and applying an electronic gain correction to improve the uniformity of the calorimeter response.