As a technology enthusiast and a lover of efficiency, I decided during the pandemic to build a Raspberry Pi cluster. My motivation? To create a robust home server to manage my movies, a self-hosted cloud storage, and, most importantly, my extensive collection of books using Calibre-Web. Here's how I did it.

The Hardware

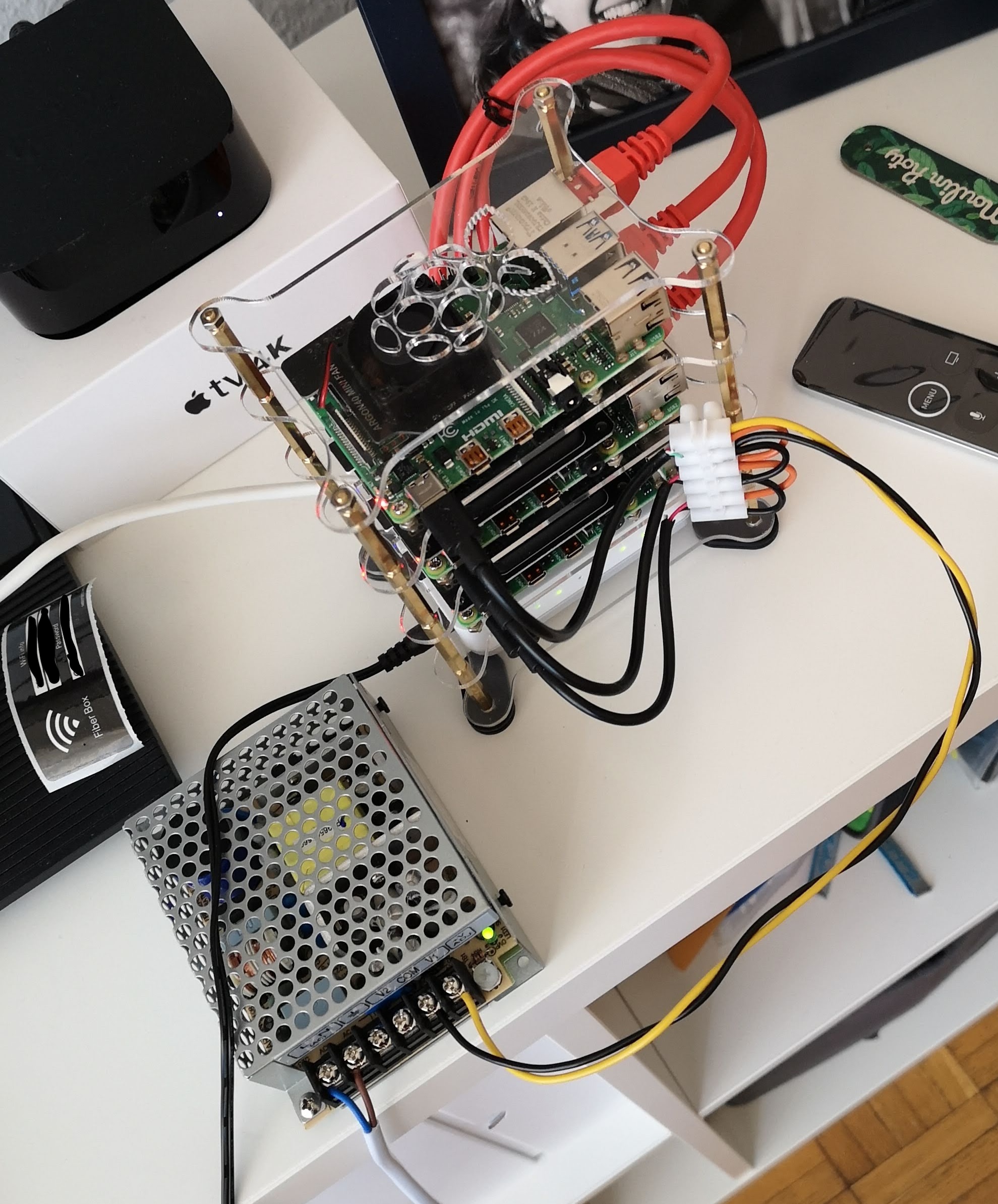

Raspberry Pi 4 boards are the heart of my project. Each board boasts 4 physical ARM cores with a base clock of 1.5GHz and up to 8GB of LPDDR4-3200 SDRAM - a compact powerhouse. For my build, I used:

- 1 Raspberry Pi 3B (2GB RAM) as a DNS server.

- 4 Raspberry Pi 4B (4GB RAM each) as cluster nodes.

- A small 5-port network switch.

- A USB hub to power the setup.

As of writing this, I've installed Ubuntu Server 21.04 on all nodes. The manager node is equipped with a 64GB micro SD card, while the worker nodes each have 32GB. This setup should adeptly handle the services I plan to run. Additionally, I'm attaching external USB drives for extra storage, essential for hosting the data used in my services.

Why Swarm Over Kubernetes?

While Kubernetes is a popular choice, I opted for Docker Swarm because it is simpler and needs far less configuration. It fits my belief that a respected physicist (and hobbyist programmer) should not spend more effort than the problem requires. Nevertheless, I'm open to exploring Kubernetes in the future for a well-rounded experience.

Initial Configuration: Names and Networks

Configuring my Raspberry Pis involved renaming each node for easy identification - node-01, node-02, etc. This was done by editing /etc/hostname and /etc/hosts, linking each hostname to its IP address. A crucial step was setting up my local DNS server, located at 192.168.1.35, to ensure smooth network communication.

Each Raspberry Pi needed a unique identity for ease of management and communication. I decided on a straightforward naming convention: node-01, node-02, node-03, and so on. This simple approach ensures clarity, especially when scaling up or troubleshooting.

- Editing Hostnames: I began by editing the /etc/hostname file on each Pi. This file contains the default name, which I changed according to my naming scheme. This step was crucial for identifying each node individually.

- Updating Hosts File: Next, I modified the /etc/hosts file. Here, I linked each new hostname to its corresponding IP address. This link is vital for the network to correctly identify and route to each node.

    192.168.1.30 node-01 node-01.home.dev
    192.168.1.31 node-02 node-02.home.dev
    192.168.1.32 node-03 node-03.home.dev

You will notice that each entry also carries the node's name under my home domain, and that the file lists all the other nodes as well, so I can easily connect from one node to another if needed.
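With more than a handful of nodes, typing these entries by hand gets error-prone. Below is a minimal sketch of how they could be generated instead, assuming the nodes sit on consecutive addresses and follow the node-NN naming scheme. The function name make_hosts_entries is hypothetical and only serves this illustration.

```shell
#!/bin/sh
# Print /etc/hosts lines for consecutively numbered nodes.
# Arguments: <ip-prefix> <first-last-octet> <node-count> <domain>
# (make_hosts_entries is an illustrative helper, not part of any tool.)
make_hosts_entries() (
  prefix=$1 first=$2 count=$3 domain=$4
  i=0
  while [ "$i" -lt "$count" ]; do
    # node-01, node-02, ... with a zero-padded two-digit index
    name=$(printf 'node-%02d' "$((i + 1))")
    printf '%s.%s %s %s.%s\n' "$prefix" "$((first + i))" "$name" "$name" "$domain"
    i=$((i + 1))
  done
)

# Reproduces the three entries shown above.
make_hosts_entries 192.168.1 30 3 home.dev
```

The output can be reviewed first and then appended to /etc/hosts on each node, for example through sudo tee -a /etc/hosts.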

My network's efficiency hinges on seamless communication between nodes. To achieve this, I designated one of my Raspberry Pis as the local DNS server. This server, at 192.168.1.35, acts as the network's address book, directing traffic accurately and efficiently.

- DNS Configuration: On each node, I configured the network settings to recognize the local DNS server. This setup means that whenever a node needs to communicate with another, it consults the DNS server to find the correct IP address, streamlining internal network communication.

- Benefits of a Local DNS: Using a local DNS server enhances the speed and reliability of network communication. It also adds a layer of customization, allowing me to manage network traffic more effectively, crucial for optimizing the performance of my web applications.

With the nodes named and the DNS server in place, the next step was to reboot each Raspberry Pi to apply these changes. Post-reboot, I tested the connectivity between the nodes. This test ensured that each node could communicate with the others using their new names, a critical step in validating my configuration.
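The connectivity test can be scripted so it is easy to repeat after every change. This is only a sketch, assuming the iputils version of ping found on Ubuntu (where -W is a timeout in seconds). The names check_nodes, probe_host and the PROBE variable are made up for this example.

```shell
#!/bin/sh
# Default probe: one ICMP echo with a 2-second timeout (Linux iputils ping).
probe_host() { ping -c 1 -W 2 "$1" >/dev/null 2>&1; }

# Probe every host given as an argument and report the result per host.
# Set PROBE to another command name to swap the probe (hypothetical knob).
# The function succeeds only if every host answered.
check_nodes() (
  failed=0
  for host in "$@"; do
    if "${PROBE:-probe_host}" "$host"; then
      echo "ok $host"
    else
      echo "FAILED $host"
      failed=$((failed + 1))
    fi
  done
  [ "$failed" -eq 0 ]
)

# Usage, run from any node:
#   check_nodes node-01 node-02 node-03 node-01.home.dev
```

Including both the short names and the fully qualified ones in the list checks the hosts file and the DNS server in one pass.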

To streamline operations, I set up SSH keys for passwordless connections between nodes. This is a simple yet effective way to enhance security and efficiency. To do this, go to the first node and run the following commands:

    ssh-keygen -t rsa
    ssh-copy-id ubuntu@node-01
    ssh-copy-id ubuntu@node-02
    ssh-copy-id ubuntu@node-03

This generates an SSH key pair and copies the public key to each node, which gives us passwordless connections. Note that you will need to leave the passphrase empty.
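Before relying on the keys in scripts, it helps to confirm that no node still falls back to a password prompt. The sketch below assumes the ubuntu user from the commands above. BatchMode=yes makes ssh fail instead of asking for a password, so a broken key shows up as an error rather than a hanging prompt. The function name check_passwordless is hypothetical.

```shell
#!/bin/sh
# Try a no-op command on each host with interactive prompts disabled.
# (check_passwordless is an illustrative name, not an existing tool.)
check_passwordless() (
  status=0
  for host in "$@"; do
    if ssh -o BatchMode=yes -o ConnectTimeout=5 "ubuntu@$host" true 2>/dev/null; then
      echo "$host: key login works"
    else
      echo "$host: key login FAILED"
      status=1
    fi
  done
  exit "$status"
)

# Usage:
#   check_passwordless node-01 node-02 node-03
```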

Installing Docker and Docker-Compose

I have found various recipes for installing Docker on Ubuntu, but with version 21.04 we can simply install it via the apt package manager. This is as simple as it gets: we run a loop over all our nodes and install Docker on each of them:

    for host in node-01 node-02 node-03; do
      echo " == installing docker on $host == "
      ssh "ubuntu@$host" "sudo apt -y install docker.io"
    done

Now let's install docker-compose. This is a tool that lets me define and manage my infrastructure using YAML configuration files. It's especially useful when your application requires multiple interdependent services.

    sudo pip3 -v install docker-compose

Before running this command, I had to install python3 and python3-pip, which were not installed by default in the Ubuntu Server version I used.

Building a Resilient Network using GlusterFS

GlusterFS, a scalable network filesystem, was my choice to create a shared, resilient storage system across the nodes. GlusterFS's beauty lies in its ability to turn standalone storage into a distributed, fault-tolerant system. I installed GlusterFS on each node, laying the foundation for shared storage.

On each Raspberry Pi, I executed the following commands to install GlusterFS:

    sudo apt install software-properties-common -y &&
    sudo apt install glusterfs-server -y

With GlusterFS installed, the next step was to start and enable the service on each node:

    sudo systemctl start glusterd
    sudo systemctl enable glusterd

After installation, the nodes needed to be interconnected to function as a single storage system. On the master node, I added the other nodes to the GlusterFS trusted storage pool:

    sudo gluster peer probe node-02
    sudo gluster peer probe node-03

To confirm that the nodes were successfully connected, I checked the peer status. The output should show a list of nodes with their respective states, confirming a successful connection (the table below is in the format printed by sudo gluster pool list):

    UUID                                    Hostname    State
    3f035204-abef-4380-a973-5f6c0ade1b34    node-02     Connected
    43766303-28d4-4855-9d5a-00664f6178d2    node-03     Connected
    89b9fdc7-336c-4966-b0e1-1d640e4e3f3f    localhost   Connected
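Reading this table by eye works for three nodes, but the check can also be automated. The sketch below assumes the three-column layout shown above (UUID, hostname, state) and prints the hostname of every peer whose state is anything other than Connected. The function name disconnected_peers is hypothetical.

```shell
#!/bin/sh
# Read a pool listing on stdin and print peers that are not Connected.
# Skips the header line; the state is taken from the last column.
# (disconnected_peers is an illustrative helper name.)
disconnected_peers() {
  awk 'NR > 1 && NF >= 3 && $NF != "Connected" { print $2 }'
}

# Usage on the master node:
#   sudo gluster pool list | disconnected_peers
# No output means every peer in the pool reported Connected.
```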

The core of GlusterFS is its volume: the logical collection of bricks (storage directories on nodes). First, on each node, I created a directory designated as a storage brick for GlusterFS:

    sudo mkdir -p /gluster/volume1

Back on the master node, I created a replicated GlusterFS volume across the nodes:

    sudo gluster volume create staging-gfs replica 3 node-01:/gluster/volume1 node-02:/gluster/volume1 node-03:/gluster/volume1 force

This command creates a volume named staging-gfs with a replica count of 3, ensuring data is replicated across three nodes for redundancy. To make the volume available for use, let's start it:

    sudo gluster volume start staging-gfs

The final step was to mount the GlusterFS volume on each node, making the shared storage accessible. This starts with creating a mount point on each node:

    sudo mkdir /mnt/brick0

To ensure the volume remains mounted across reboots, I added the following entry to /etc/fstab on each node:

    node-01:/staging-gfs /mnt/brick0 glusterfs defaults,_netdev,backupvolfile-server=localhost 0 0

Finally, I mounted the volume using sudo mount -a. To confirm the mount, I ran df -h, which showed the GlusterFS volume and its usage details.
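Since the fstab entry has to be added on every node, it is worth making that step safe to run twice. The following is a sketch under the assumption that one entry per mount point is wanted: it appends the line only when no uncommented line already uses the same mount point. The function name add_fstab_entry is hypothetical, and the demo deliberately works on a scratch file instead of the real /etc/fstab.

```shell
#!/bin/sh
# Append an fstab entry unless its mount point is already configured.
# Arguments: <fstab-file> <entry-line>
# (add_fstab_entry is an illustrative helper name.)
add_fstab_entry() (
  file=$1 entry=$2
  # The mount point is the second field of an fstab line.
  mount_point=$(printf '%s\n' "$entry" | awk '{ print $2 }')
  if awk -v mp="$mount_point" \
      '$1 !~ /^#/ && $2 == mp { found = 1 } END { exit !found }' "$file"; then
    echo "already present: $mount_point"
  else
    printf '%s\n' "$entry" >> "$file"
    echo "added: $mount_point"
  fi
)

ENTRY='node-01:/staging-gfs /mnt/brick0 glusterfs defaults,_netdev,backupvolfile-server=localhost 0 0'

# Demo on a scratch file: the second call finds the entry and skips it.
scratch=$(mktemp)
add_fstab_entry "$scratch" "$ENTRY"
add_fstab_entry "$scratch" "$ENTRY"
rm -f "$scratch"
```

Pointing the function at /etc/fstab requires root, and keeping a backup copy of the file first is a sensible precaution.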

To test the resilience of my GlusterFS setup, I created a file in the /mnt/brick0 directory on one node and verified its presence in the /gluster/volume1 directory on all nodes. This test confirmed that data written to one node was replicated across the cluster, ensuring data availability and redundancy.
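Looking back at the volume-creation command, the replica count and the brick list have to stay in step: for a purely replicated volume like staging-gfs, there is one brick per replica. A small sketch of deriving both from a single host list follows. It only prints the command so it can be reviewed before running it with sudo, and the function name gluster_create_cmd is hypothetical.

```shell
#!/bin/sh
# Build a "gluster volume create" command for a purely replicated volume,
# with one brick per host and the replica count equal to the host count.
# Arguments: <volume-name> <brick-directory> <host>...
# (gluster_create_cmd is an illustrative helper name.)
gluster_create_cmd() (
  volume=$1 brick_dir=$2
  shift 2
  count=$#
  bricks=""
  for host in "$@"; do
    bricks="$bricks $host:$brick_dir"
  done
  echo "gluster volume create $volume replica $count$bricks force"
)

# Prints the same command that was used for staging-gfs.
gluster_create_cmd staging-gfs /gluster/volume1 node-01 node-02 node-03
```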

Traefik: Managing Traffic

Managing the traffic between various containers is the next step. Traefik, a modern reverse proxy and load balancer, was my choice to simplify and streamline this process. Here's how I integrated Traefik into my Raspberry Pi cluster.

First, let's set up Traefik, which plays the role of the gateway for our containers. Traefik simplifies the routing of requests to various containers, acting as a gatekeeper that ensures smooth and secure traffic flow.

Creating the Traefik Configuration: I started by creating a traefik.toml configuration file. This file defines the basic settings of Traefik, such as entry points and dashboard settings:

    [entryPoints]
      [entryPoints.web]
        address = ":80"

    [api]
      dashboard = true
      insecure = true

    [providers.docker]
      watch = true
      network = "homenet"
      exposedByDefault = false

This configuration sets up an entry point for web traffic on port 80 and enables the Traefik dashboard. The Docker provider configuration ensures that Traefik watches for changes in Docker and uses the homenet network, created in the next step, to reach the containers.

Creating the Docker Network: To allow communication between containers, I created an external network named homenet:

    docker network create -d overlay homenet --scope swarm

This network enables containers to communicate across the Docker Swarm cluster.

Deploying Traefik with Docker Compose

I used a Docker Compose file to deploy Traefik, which lets me define and run multi-container Docker applications. Below is the file, saved as traefik.compose.yml, used to set up Traefik:

    version: "3.4"
    services:
      traefik:
        image: "traefik:2.3"
        container_name: "traefik"
        restart: "unless-stopped"
        ports:
          - "80:80"
          - "8080:8080"
        volumes:
          - "/var/run/docker.sock:/var/run/docker.sock:ro"
          - "./traefik/traefik.toml:/traefik.toml"
        networks:
          - homenet
      whoami:
        image: "traefik/whoami"
        restart: "unless-stopped"
        labels:
          - "traefik.enable=true"
          - "traefik.http.routers.whoami.rule=PathPrefix(`/whoami{regex:$$|/.*}`)"
          - "traefik.http.services.whoami.loadbalancer.server.port=80"
        networks:
          - homenet
    networks:
      homenet:
        external: true

This configuration deploys Traefik and a simple 'whoami' service for testing. The whoami service provides a basic web page that shows the container's information, useful for verifying the setup.

Now, to deploy the Traefik service, I used the following command:

    docker stack deploy --compose-file traefik.compose.yml traefik

With Traefik running, we can access its dashboard to monitor and manage the container traffic. The dashboard is accessible via http://node-01.home-network.io:8080. This dashboard offers a real-time overview of the cluster, routing rules, and the status of various services. Traefik's dynamic configuration and automatic service discovery made managing container traffic intuitive and efficient. Each request to my cluster was intelligently routed to the appropriate container, ensuring optimal performance of my home server applications.
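Besides the dashboard, a route can be checked from the command line by asking Traefik for the HTTP status code it returns. The sketch below wraps curl for that purpose. The optional second argument sends a Host header, which is how a Host() rule can be exercised before the name resolves in DNS. The function name route_status is hypothetical, and the URLs in the usage comments simply reuse the host names from this article.

```shell
#!/bin/sh
# Print the HTTP status code returned for a URL.
# Arguments: <url> [host-header]
# (route_status is an illustrative helper name.)
route_status() {
  if [ -n "${2:-}" ]; then
    # Present a specific Host header, for routers that match on Host().
    curl -s -o /dev/null -w '%{http_code}' -H "Host: $2" "$1"
  else
    curl -s -o /dev/null -w '%{http_code}' "$1"
  fi
}

# Usage:
#   route_status http://node-01.home-network.io/whoami
#   route_status http://node-01.home-network.io/ calibre-web.home-network.io
```

A 200 means the router matched and the container answered, while a 404 from Traefik usually means that no router rule matched the request.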

Deploying Web Applications: Bringing Calibre-Web to Life

One of the primary motivations behind building my Raspberry Pi cluster was to efficiently run web applications like Calibre-Web. Calibre-Web is a web app providing an elegant interface to manage and view eBooks. Let's start by setting up Calibre-Web, which will serve as a self-hosted digital library on my home network.

Calibre-Web brings the vast world of ebooks to my fingertips, organized and accessible from anywhere on my home network. The deployment process involved a Docker container, making it straightforward to set up and manage.

Docker Compose for Calibre-Web: To deploy Calibre-Web, I created a docker-compose.yml file specific to Calibre-Web:

    version: '3.3'
    services:
      calibre-web:
        image: linuxserver/calibre-web
        container_name: calibre-web
        environment:
          - PUID=1000
          - PGID=1000
          - TZ=Europe/London
          - DOCKER_MODS=linuxserver/calibre-web:calibre
        volumes:
          - /path/to/calibre/data:/config
          - /path/to/books:/books
        ports:
          - '8083:8083'
        restart: unless-stopped
        networks:
          - homenet
    networks:
      homenet:
        external: true

In this configuration, I specified the LinuxServer's Calibre-Web image, set environmental variables (like user ID, group ID, and time zone), and defined volume mappings for configuration data and the eBooks directory. The service is exposed on port 8083.

- Environmental Variables: PUID and PGID are set for user permission management, while TZ sets the container's timezone.

- Volumes: The /config volume holds Calibre-Web's configuration files and database, while /books is where the actual eBook files are stored.

- Network Configuration: Calibre-Web is connected to the homenet network for easy communication with other services in the cluster.

To deploy Calibre-Web, I ran the following command in the directory containing the docker-compose.yml file:

    docker-compose up -d

This command starts Calibre-Web in detached mode, running in the background. Once deployed, Calibre-Web was accessible through any web browser on my home network at http://node-01.home-network.io:8083. The first time you access Calibre-Web, it will ask you to point to the folder where your eBooks are stored (in this case, the /books directory).

Now, to integrate Calibre-Web with Traefik for easy access and potential future SSL implementation, I added labels to the service in the docker-compose.yml file:

        labels:
          - "traefik.enable=true"
          - "traefik.http.routers.calibre-web.rule=Host(`calibre-web.home-network.io`)"
          - "traefik.http.services.calibre-web.loadbalancer.server.port=8083"

These labels instruct Traefik to route traffic to the Calibre-Web container and expose it on a friendly URL.

In Conclusion

Building this Raspberry Pi cluster has been a journey of learning and experimentation. It's a project that blends my love for technology with practical applications, creating a system that not only serves my needs but also fuels my passion for innovation.

Further Reading

For those interested in delving deeper, here are some invaluable resources I found during my project: